Machine learning: how to undersample the wrong way

By Gilles Dubuc, Senior Software Engineer, Wikimedia Performance Team

For the past couple of months, in collaboration with researchers, I’ve been applying machine learning to RUM (real user monitoring) metrics in order to model the microsurvey we’ve been running since June on some wikis. The goal is to gain some insight into which RUM metrics matter most to real users.

Having never done any machine learning before, I made a few rookie mistakes. In this post I’ll explain the biggest one, which led us to believe for some time that we had built a very well-performing model.

Class imbalance

The survey we’re collecting user feedback with has a big class imbalance issue when it comes to machine learning: far more people are happy with the performance than unhappy (a good problem to have, for sure!). To build a machine learning model that works, we used a common strategy to address this: undersampling. The idea is that in a binary classification, if you have far more rows for one of the two values than for the other, you discard the excess rows of the majority value until both values are equally represented.

Sounds simple, right? In Python/pandas it looks something like this:

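```python
# Sort by response so that the negative (minority) responses come first
dataset.sort_values(by=[column_prefix + 'response'], inplace=True)
# value_counts() is sorted by frequency, highest first: the last entry is the minority count
negative_responses_count = dataset[column_prefix + 'response'].value_counts().iloc[-1]
# Keep the first 2N rows: all N negative responses, followed by the first N positive ones
dataset = dataset.head(n=int(negative_responses_count) * 2)
```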

Essentially, we sort by response value so that the value we have the fewest rows for (negative responses) ends up at the top, then we use head() to get the first N records, where N is twice the number of negative survey responses. With this, we should end up with exactly the same number of rows for each value (negative and positive response). So far so good.

Then we apply our machine learning algorithm to the dataset (for a binary classification of this kind, random forest is a good choice, for example). At first the results were poor; then we added a basic feature we had forgotten to include: time. Time of day, day of the week, day of the year, etc. Once we added these, things started to work incredibly well! Surely we had discovered something groundbreaking about seasonality/time-dependence in this data. Or…
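Time features like these can be derived from a timestamp with pandas’ `.dt` accessor. The following is a minimal sketch assuming a hypothetical `timestamp` column and two made-up rows; it illustrates the idea and is not the feature extraction we actually used.

```python
import pandas as pd


def add_time_features(df: pd.DataFrame, timestamp_column: str) -> pd.DataFrame:
    """Return a copy of df with simple calendar features derived from a timestamp column."""
    timestamps = pd.to_datetime(df[timestamp_column])
    out = df.copy()
    out["hour_of_day"] = timestamps.dt.hour
    out["day_of_week"] = timestamps.dt.dayofweek  # Monday=0 ... Sunday=6
    out["day_of_year"] = timestamps.dt.dayofyear
    return out


# Made-up rows with a hypothetical "timestamp" column
responses = pd.DataFrame({
    "timestamp": ["2019-06-01 08:30:00", "2019-10-01 22:15:00"],
    "response": ["positive", "negative"],
})
features = add_time_features(responses, "timestamp")
print(features[["hour_of_day", "day_of_week", "day_of_year"]])
```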

I’ve made a huge mistake

The code snippet above contains a critical mistake. The records in the original dataset are in chronological order. When we sort by “response” value, this chronological order remains within each sorted section of the dataset.

We have to perform undersampling because we have too many positive survey responses over the full timespan. We start by keeping all the negative responses, which are spread over the full timespan. But we only keep the first N positive responses… which, due to the chronological ordering of records, come from a much shorter timespan. We end up with a dataset where, for example, negative responses range from June 1st to October 1st, while positive responses only range from June 1st to June 15th.
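To make this concrete, here is a small sketch on synthetic, made-up data (not our survey data): one response per day from June 1st to October 1st, every tenth one negative, undersampled with the same sort-then-`head()` approach. It passes `kind="stable"` to `sort_values()` so that the chronological order within each class is guaranteed to survive the sort (pandas’ default quicksort is not stable). The `date` and `response` column names are assumptions.

```python
import numpy as np
import pandas as pd

# Made-up, chronologically ordered data: one response per day,
# every tenth one negative (13 negative, 110 positive)
dates = pd.date_range("2019-06-01", "2019-10-01", freq="D")
responses = np.where(np.arange(len(dates)) % 10 == 0, "negative", "positive")
dataset = pd.DataFrame({"date": dates, "response": responses})

# Sort-then-head undersampling: negatives come first ("negative" < "positive"),
# then keep the first 2N rows
dataset = dataset.sort_values(by="response", kind="stable")
negative_responses_count = int(dataset["response"].value_counts().iloc[-1])
undersampled = dataset.head(n=negative_responses_count * 2)

# The classes are balanced, but they no longer cover the same period
date_ranges = undersampled.groupby("response")["date"].agg(["min", "max"])
print(date_ranges)
```

Both classes end up with 13 rows, but the negative responses still run from June 1st to September 29th, while the positive ones stop at June 15th.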

The model started giving excellent results when we introduced time as a feature because it had basically detected the date discrepancy in our dataset! It’s pretty easy to guess whether a response is positive by looking at its date: in our dataset, every response dated after June 15th is negative… Our machine learning model had simply become excellent at detecting our mistake 🙂
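A single split on the day of the year, the kind of split a decision tree in a random forest can learn, is already enough to exploit such a discrepancy. This sketch scores the best rule of the form “positive if day of year is at most t” on a handful of made-up rows mimicking the example above (positives between June 1st and 15th, negatives spread until October 1st). It is an illustration only, not our model.

```python
import numpy as np


def best_stump_accuracy(feature: np.ndarray, is_positive: np.ndarray) -> float:
    """Accuracy of the best single-threshold rule 'positive iff feature <= t'."""
    best = 0.0
    for threshold in np.unique(feature):
        predicted_positive = feature <= threshold
        best = max(best, float(np.mean(predicted_positive == is_positive)))
    return best


# Made-up day-of-year values (non-leap year): June 1st is day 152,
# June 15th is day 166, October 1st is day 274
day_of_year = np.array([153, 158, 162, 166, 152, 190, 230, 274])
is_positive = np.array([True, True, True, True, False, False, False, False])

print(best_stump_accuracy(day_of_year, is_positive))  # 0.875
```

With balanced classes, a rule that ignores the data scores 0.5; a date-only rule doing far better says nothing about performance, only about how the dataset was built.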

A simple solution

The workaround for this issue is simply to pick N positive responses at random from the whole timespan when undersampling, so that both classes cover the same period:

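```python
import pandas
from sklearn.utils import shuffle  # assumption: the shuffle() used here is scikit-learn's

dataset.sort_values(by=[column_prefix + 'response'], inplace=True)
negative_responses_count = dataset[column_prefix + 'response'].value_counts().iloc[-1]
# All negative responses sit at the top after sorting; the positive ones follow
negative_responses = dataset.head(n=int(negative_responses_count))
positive_responses = dataset.tail(n=int(dataset.shape[0] - negative_responses_count))
# Shuffle the positive responses before keeping N of them, so they come from the whole timespan
positive_responses = shuffle(positive_responses).head(n=int(negative_responses_count))
dataset = pandas.concat([negative_responses, positive_responses])
```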

This way we ensure that we’re not introducing a time imbalance when working around our class imbalance.
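For what it’s worth, pandas can also do this kind of random undersampling in one step with `groupby().sample()` (available since pandas 1.1). The sketch below is an alternative, not the code we ran. It uses synthetic, made-up data (one response per day from June 1st to October 1st, every tenth one negative), and the `undersample` helper and the `date`/`response` column names are hypothetical. It also prints each class’s date range as a sanity check.

```python
import numpy as np
import pandas as pd


def undersample(dataset: pd.DataFrame, label_column: str, random_state=None) -> pd.DataFrame:
    """Randomly keep as many rows of each class as the smallest class has."""
    minority_count = int(dataset[label_column].value_counts().min())
    # Each class is sampled uniformly at random, regardless of row order,
    # so the kept rows are spread over the whole timespan
    return dataset.groupby(label_column).sample(n=minority_count, random_state=random_state)


# Made-up, chronologically ordered data: one response per day, every tenth one negative
dates = pd.date_range("2019-06-01", "2019-10-01", freq="D")
responses = np.where(np.arange(len(dates)) % 10 == 0, "negative", "positive")
dataset = pd.DataFrame({"date": dates, "response": responses})

balanced = undersample(dataset, "response", random_state=42)
print(balanced["response"].value_counts())
# Sanity check: both classes should now cover roughly the same period
print(balanced.groupby("response")["date"].agg(["min", "max"]))
```

Checking per-class date ranges (or any other feature that should not depend on the label) after undersampling is a cheap way to catch this kind of mistake before training a model.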

About this post

This post was originally published on the Wikimedia Performance Team Phame blog.

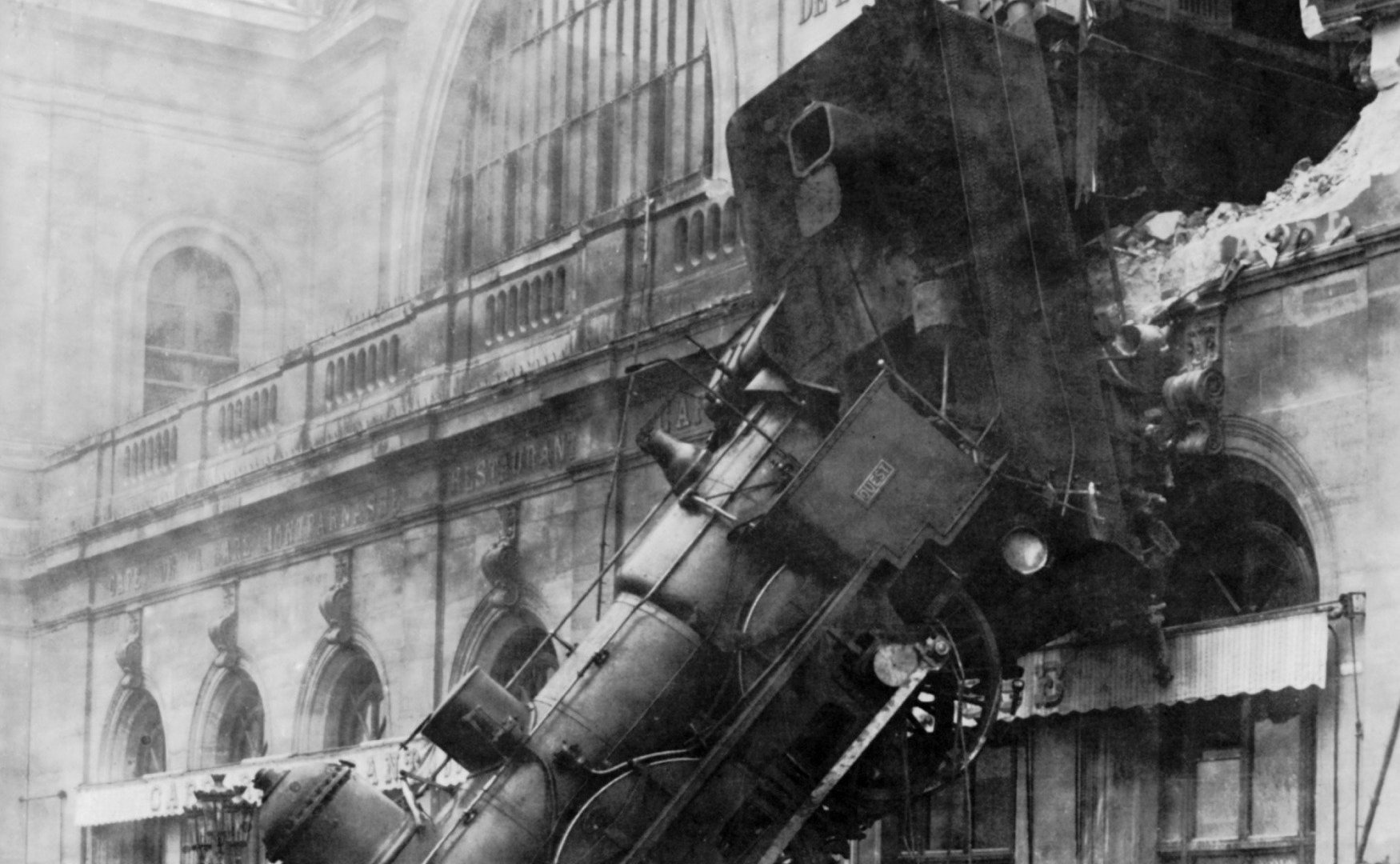

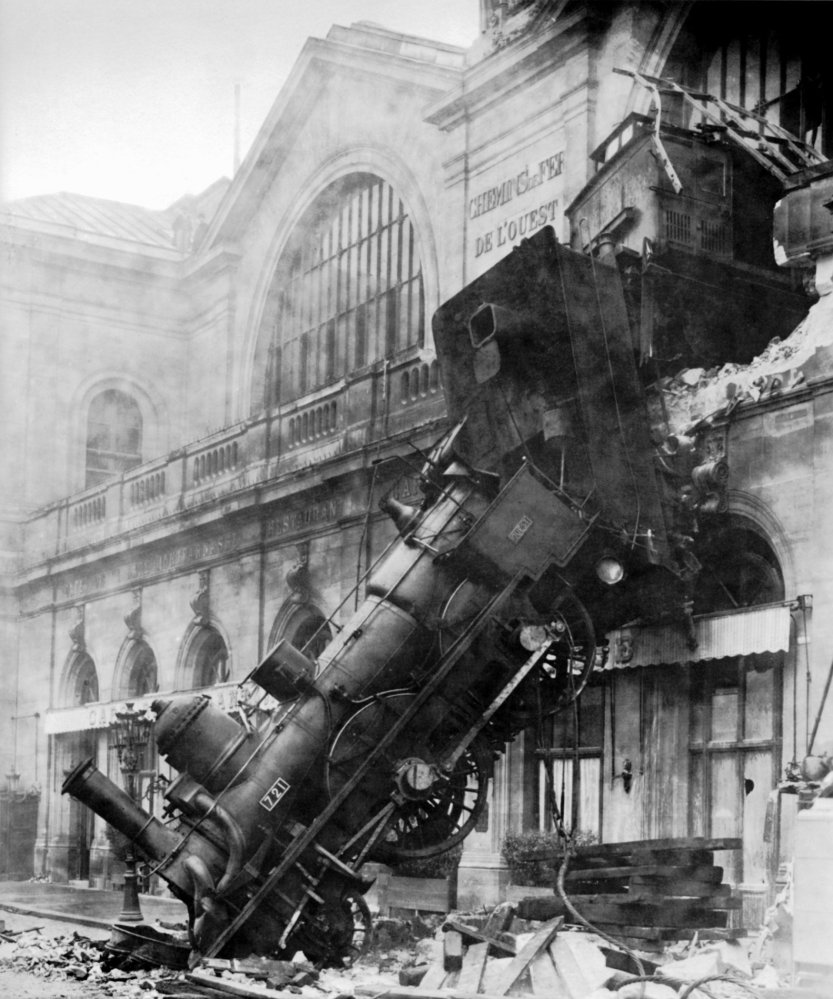

Featured image credit: Train wreck at Montparnasse 1895, Levy & fils, Public domain.