Modernizing our tech stack for serving maps at Wikipedia

By Yiannis Giannelos and the Content Transform Team

As part of our mission to provide free access to knowledge, we provide our community with infrastructure to enhance the content of Wikipedia projects with cartography.

Our Maps technology stack consists of services and infrastructure that allow importing open geospatial data from OpenStreetMap, visualizing it, and serving it at scale. This enables contributors to enhance articles with static or dynamic maps and map annotations, as well as to consume our map data as a data source for external websites. Given the ecosystem at the time our Maps stack was introduced, we built most of our software in-house and, up until recently, we relied heavily on our existing infrastructure. This eventually introduced limitations and pain points from a maintenance and operations perspective, and also made things less flexible from an architectural and development point of view.

Since late 2020, we have had the opportunity to put more effort into the evolution of our Maps stack. We carefully evaluated its current state, the open-source geospatial ecosystem and how it has evolved, and, most importantly, how we could introduce improvements to make operating our maps more sustainable. This led to a refactoring effort to modernize our tech stack, which we launched in the past few months.

Our main concern was improving the operational aspect of our Maps stack. Having a big monolith, where everything was bundled together as one service and designed to be deployed as an all-in-one bundle per server, made it difficult to decouple it into smaller components and to reuse existing infrastructure or other open-source solutions. Our first improvement was to replace our custom vector tile server with an open-source solution that fit our needs. After multiple assessments, we ended up adopting Tegola, a Golang-based vector tile server, along with a new standard for geospatial vector data, working closely with the folks from go-spatial. On top of that, we moved from using our dedicated Maps server cluster to our production Kubernetes environment for serving vector map tiles.

Another issue we addressed was moving away from our deprecated, end-of-life job queue system for tile pregeneration to something that has wider support across the organization. We started using our well-established Kafka-based event platform to produce and consume events for stale tiles that need to be regenerated. In a similar vein, trying to reuse existing systems, we moved from our custom Cassandra-based tile storage system to our production OpenStack Swift cluster, which brings greater flexibility in storing and serving tiles over HTTP.
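To make the idea of stale-tile events more concrete, here is a minimal sketch in Python. It is not our production code: the event fields, the `tile.stale` type name, and the `z/x/y.mvt` object key layout are all hypothetical, chosen only for illustration. The only established piece is the standard Web Mercator ("slippy map") formula for mapping a coordinate to a tile index. Given the bounding box of a changed area, the sketch lists the tiles that would need regeneration, builds one event payload per tile, and derives the key under which a regenerated tile could be stored in an object store.

```python
import json
import math
from typing import Iterator


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) index of the Web Mercator tile containing a point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that lon=180 or extreme latitudes stay inside the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def stale_tiles(west: float, south: float, east: float, north: float,
                zoom: int) -> Iterator[tuple[int, int, int]]:
    """Yield (z, x, y) for every tile touching a changed bounding box."""
    x_min, y_min = lonlat_to_tile(west, north, zoom)  # y grows southwards
    x_max, y_max = lonlat_to_tile(east, south, zoom)
    for x in range(x_min, x_max + 1):
        for y in range(y_min, y_max + 1):
            yield zoom, x, y


def stale_tile_event(z: int, x: int, y: int) -> str:
    """Serialize a hypothetical 'tile is stale' event as JSON."""
    return json.dumps({"type": "tile.stale", "z": z, "x": x, "y": y})


def tile_object_key(z: int, x: int, y: int) -> str:
    """Hypothetical object-store key for a pregenerated vector tile."""
    return f"{z}/{x}/{y}.mvt"


if __name__ == "__main__":
    # A small box around the equator and prime meridian at zoom level 1.
    for z, x, y in stale_tiles(-10.0, -10.0, 10.0, 10.0, zoom=1):
        print(stale_tile_event(z, x, y), "->", tile_object_key(z, x, y))
```

In a setup like the one described above, a producer would publish each payload to an event stream, and a consumer would regenerate the tile and upload it under the derived key, so that tiles can then be served over plain HTTP.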

Finally, we introduced a number of improvements to our data pipeline for OpenStreetMap data by auditing the performance of our geospatial queries and simplifying configuration management by abstracting queries in PostgreSQL. We are now using imposm, which has not only improved performance when importing OpenStreetMap data but has also made us more resilient to schema changes and deployment failures, thanks to the ability to roll back. Overall, we managed to bring down the planet import and tile pregeneration time from almost 2 months to almost 2 weeks, and gained the flexibility to move forward with other pressing issues.

As immediate next steps, we are aiming to break more components out of our Maps stack by simplifying the packaging of Kartotherian, which would make it a good candidate for deployment to Kubernetes, and to tackle a few other existing issues, such as the performance of the geoshapes API and the integrity of OpenStreetMap data. Finally, as part of this whole effort, we identified some opportunities for improvement that we might revisit in the future, such as client-side rendering of maps and adapting our GIS data schema to an existing community-driven specification like OpenMapTiles.

About this post

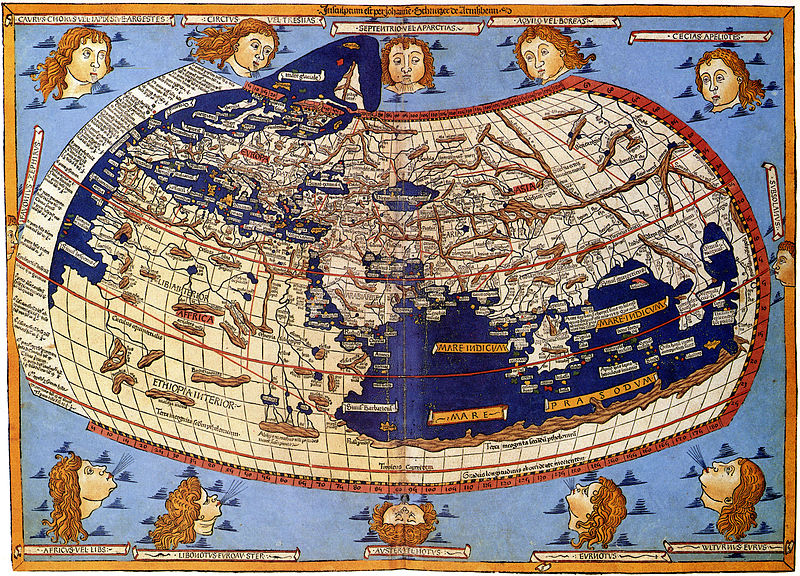

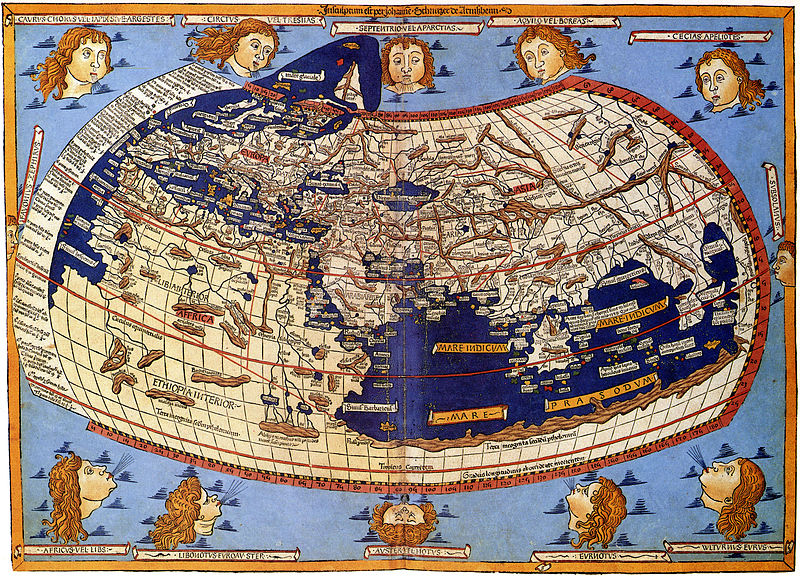

Featured image credit: 1482 Cosmographia Germanus, Public domain.