Around the world: How Wikipedia became a multi-datacenter deployment

Learn why we transitioned the MediaWiki platform to serve traffic from multiple data centers, and the challenges we faced along the way.

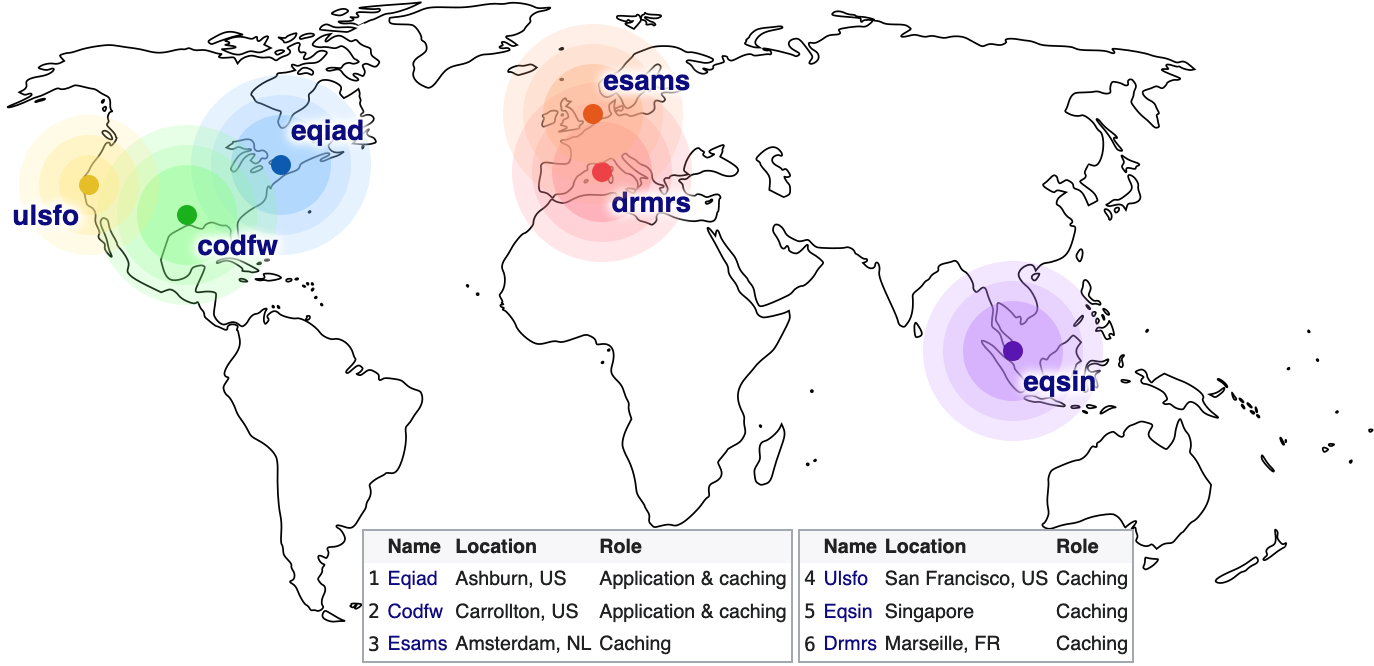

The Wikimedia Foundation provides access to information for people around the globe. When you visit Wikipedia, your browser sends a web request to our servers and receives a response. Our servers are located in multiple geographically separate data centers. This gives us the ability to quickly respond to you from the closest possible location.

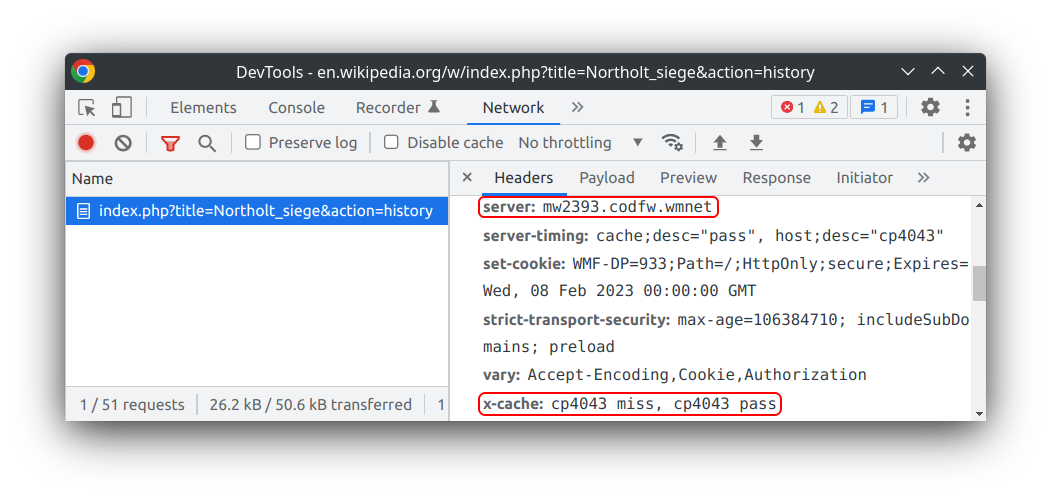

You can find out which data center is handling your requests by using the Network tab in your browser’s developer tools (e.g. right-click -> Inspect element -> Network). Refresh the page and click the top row in the table. In the “x-cache” response header, the first digit in each server name corresponds to a data center on the map above.

In the example above, we can tell from the 4 in “cp4043” that San Francisco was chosen as my nearest caching data center. The cache did not contain a suitable response, so the 2 in “mw2393” indicates that Dallas was chosen as the application data center. Application data centers are where we run the MediaWiki platform on hundreds of bare metal Apache servers. The backend response from there is then proxied via San Francisco back to me.
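As a rough illustration of how to read those server names, here is a minimal Python sketch. It is not Wikimedia tooling: the function name `site_of` and the `SITES` table are hypothetical, and the table only contains the two digits spelled out in this post (2 and 4). The other digits would need to be filled in from the map.

```python
import re

# Only the two digits named in this post; other sites are shown on the map.
SITES = {"2": "Dallas", "4": "San Francisco"}

def site_of(hostname: str) -> str:
    """Return the data center for a server name such as 'cp4043' or 'mw2393'."""
    # Letters identify the server role; the first digit identifies the site.
    match = re.match(r"[a-z]+(\d)\d*$", hostname)
    if match is None:
        raise ValueError(f"unrecognized server name: {hostname!r}")
    return SITES.get(match.group(1), "unknown (see map)")

print(site_of("cp4043"))  # San Francisco
print(site_of("mw2393"))  # Dallas
```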

Why multiple data centers?

Our in-house Content Delivery Network (CDN) is deployed in multiple geographic locations. This lowers response time by reducing the distance that data must travel through (inter)national cables and other networking infrastructure from your ISP and Internet backbones. Each caching data center that makes up our CDN contains cache servers that remember previous responses to speed up delivery. Requests that have no matching cache entry yet must be forwarded to a backend server in the application data center.

If these backend servers are also deployed in multiple geographies, we lower the latency for requests that are missing from the cache, or that are uncacheable. Operating multiple application data centers also reduces organizational risk from catastrophic damage or connectivity loss to a single data center. To achieve this redundancy, each application data center must contain all hardware, databases, and services required to handle the full worldwide volume of our backend traffic.

Multi-region evolution of our CDN

Wikimedia started running its first datacenter in 2004, in St Petersburg, Florida. This contained all our web servers, databases, and cache servers. We designed MediaWiki, the web application that powers Wikipedia, to support cache proxies that can handle our scale of Internet traffic. This involves including Cache-Control headers, sending HTTP PURGE requests when pages are edited, and intentional limitations to ensure content renders the same for different people. We originally deployed Squid as the cache proxy software, and later replaced it with Varnish and Apache Traffic Server.

In 2005, with only minimal code changes, we deployed cache proxies in Amsterdam, Seoul, and Paris. More recently, we’ve added caching clusters in San Francisco, Singapore, and Marseille. Each significantly reduces latency from Europe and Asia.

Adding cache servers increased the overhead of cache invalidation, as the backend would send an explicit PURGE request to each cache server. After ten years of growth both in Wikipedia’s edit rate and the number of servers, we adopted a more scalable solution in 2013 in the form of a one-to-many broadcast. This eventually reaches all caching servers, through a single asynchronous message (based on UDP multicast). This was later replaced with a Kafka-based system in 2020.

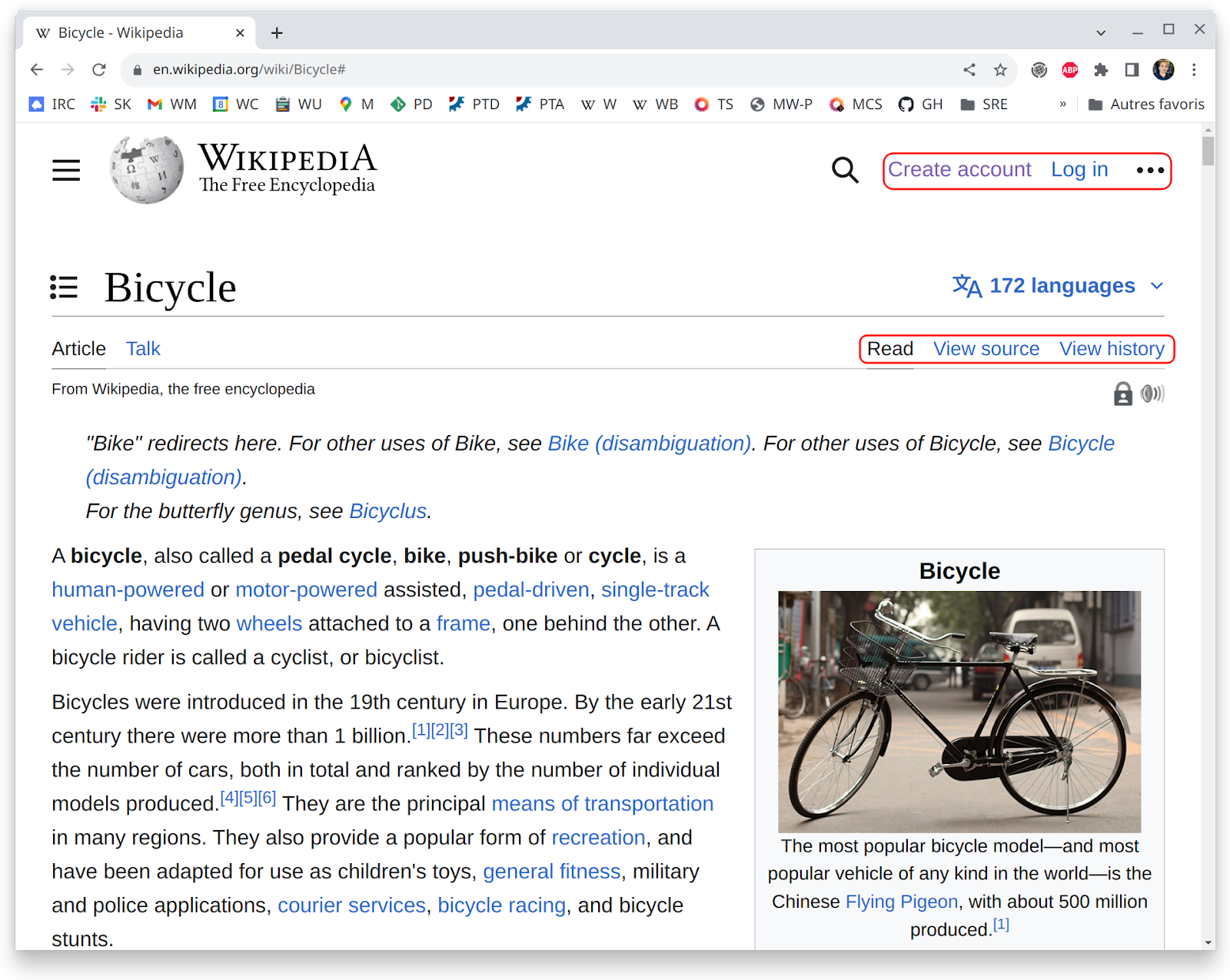

The traffic we receive from logged-in users is only a fraction of that of logged-out users, while also being difficult to cache. We forward such requests uncached to the backend application servers. When you browse Wikipedia on your device, the page can vary based on your name, interface preferences, and account permissions. Notice the elements highlighted in the example above. This kind of variation gets in the way of whole-page HTTP caching by URL.

Our highest-traffic endpoints are designed to be cacheable even for logged-in users. This includes our CSS/JavaScript delivery system (ResourceLoader), and our image thumbnails. The performance of these endpoints is essential to the critical path of page views.

Multi-region for application servers

The Wikimedia Foundation began operating a secondary data center in 2014, as a contingency to facilitate a quick and full recovery within minutes in the event of a disaster. We exercise full switchovers annually, and we use it throughout the year to ease maintenance through partial switchover of individual backend services.

Actively serving traffic from both data centers would add advantages over a cold-standby system:

- Requests are forwarded to closer servers, which reduces latency.

- Traffic load is spread across more hardware, instead of half sitting idle.

- No need to “warm up” caches in a standby data center prior to switching traffic from one data center to another.

- With multiple data centers in active use, there is institutional incentive to make sure each one can correctly serve live traffic. This avoids creation of services that are configured once, but not reproducible elsewhere.

We drafted several ideas into a proposal in 2015, to support multiple application data centers. Many components of the MediaWiki platform assumed operating from one backend data center. For example, they assumed that a primary database is always reachable for querying, or that deleting a key from “the” Memcache cluster suffices to invalidate a cache. We needed to adopt new paradigms and patterns, deploy new infrastructure, and update existing components to accommodate these. Our seven-year journey ended in 2022, when we finally enabled concurrent use of multiple data centers!

The biggest changes that made this transition possible are outlined below.

HTTP verb traffic routing

MediaWiki was designed from the ground up to make liberal use of relational databases (e.g. MySQL). During most HTTP requests, the backend application makes several dozen round trips to its databases. This is acceptable when those databases are physically close to the web servers (<0.2ms ping time). But these round trips would accumulate significant delays if the databases were in a different region (e.g. 35ms ping time).
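To make the scale of that difference concrete, here is a back-of-the-envelope calculation in Python. The round-trip count of 36 is an assumption (reading “several dozen” as three dozen), and the sketch assumes the queries run one after another. The two ping times are the figures quoted above.

```python
ROUND_TRIPS = 36           # assumption: "several dozen" read as three dozen
LOCAL_PING_MS = 0.2        # same data center (upper bound quoted above)
CROSS_REGION_PING_MS = 35  # example cross-region figure quoted above

def db_wait_ms(round_trips: int, ping_ms: float) -> float:
    """Total network wait for sequential database round trips."""
    return round_trips * ping_ms

local = db_wait_ms(ROUND_TRIPS, LOCAL_PING_MS)
remote = db_wait_ms(ROUND_TRIPS, CROSS_REGION_PING_MS)
print(f"local: {local:.1f} ms, cross-region: {remote:.0f} ms")
```

Under these assumptions the network wait grows from about 7 ms to well over a second per request, before the database has done any work.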

MediaWiki is also designed to strictly separate primary (writable) from replica (read-only) databases. This is essential at our scale. We have a CDN and hundreds of web servers behind it. As traffic grows, we can add more web servers and replica database servers as-needed. But, this requires that page views don’t put load on the primary database server — of which there can be only one! Therefore we optimize page views to rely only on queries to replica databases. This generally respects the “method” section of RFC 9110, which states that requests that modify information (such as edits) use HTTP POST requests, whereas read actions (like page views) only involve HTTP GET (or HTTP HEAD) requests.

The above pattern gave rise to the key idea that there could be a “primary” application datacenter for “write” requests, and “secondary” data centers for “read” requests. The primary databases reside in the primary datacenter, while we have MySQL replicas in both data centers. When the CDN has to forward a request to an application server, it chooses the primary datacenter for “write” requests (HTTP POST) and the closest datacenter for “read” requests (e.g. HTTP GET).

We cleaned up and migrated components of MediaWiki to fit this pattern. For pragmatic reasons, we did make a short list of exceptions. We allow certain GET requests to always route to the primary data center. The exceptions require HTTP GET for technical reasons, and change data at the same low frequency as POST requests. The final routing logic is implemented in Lua on our Apache Traffic Server proxies.
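The routing rule described in this section can be sketched as follows. This is a simplified Python illustration, not the production Lua code. The function name, the exception path, and the choice to send every method other than GET and HEAD to the primary data center are all assumptions made for the example.

```python
READ_METHODS = {"GET", "HEAD"}

# Hypothetical placeholder: this post does not list the real exceptions.
PRIMARY_ONLY_GET_PATHS = {"/example/always-primary"}

def choose_datacenter(method: str, path: str, primary: str, closest: str) -> str:
    """Pick the application data center for a request the CDN must forward."""
    # Assumption: anything that is not a known read method is treated as a write.
    if method.upper() not in READ_METHODS:
        return primary
    # A short list of GET requests is always routed to the primary.
    if path in PRIMARY_ONLY_GET_PATHS:
        return primary
    return closest

print(choose_datacenter("GET", "/wiki/Earth", "ashburn", "carrollton"))    # carrollton
print(choose_datacenter("POST", "/w/index.php", "ashburn", "carrollton"))  # ashburn
```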

Media storage

Our first file storage and thumbnailing infrastructure relied on NFS. NetApp hardware provided mirroring to standby data centers.

By 2012, this required increasingly expensive hardware and proved difficult to maintain. We migrated media storage to Swift, a distributed file store.

As MediaWiki assumed direct file access, Aaron Schulz and Tim Starling introduced the FileBackend interface to abstract this. Each application data center has its own Swift cluster. MediaWiki tries writes to both clusters, and the “swiftrepl” background service manages consistency. When our CDN finds thumbnails absent from its cache, it forwards requests to the nearest Swift cluster.

Job queue

MediaWiki has featured a job queue system since 2009, for performing background tasks. We migrated our Redis-based job queue service to Kafka in 2017. With Kafka, we support bidirectional and asynchronous replication. This allows MediaWiki to quickly and safely queue jobs locally within the secondary data center. Jobs are then relayed to and executed in the primary data center, near the primary databases.

The bidirectional queue helps support legacy features that discover data updates during a pageview or other HTTP GET request. Changing each of these features was not feasible in a reasonable time span. Instead, we designed the system to ensure queueing operations are equally fast and local to each data center.

In-memory object cache

MediaWiki uses Memcached as an LRU key-value store to cache frequently accessed objects. Though not as efficient as whole-page HTTP caching, this very granular cache is suitable for dynamic content.

Some MediaWiki extensions assumed that Memcached had strong consistency guarantees, or that a cache could be invalidated by setting new values at relevant keys when the underlying data changes. Although these assumptions were never valid, they worked well enough in a single data center.

We introduced WANObjectCache as a simple yet robust interface in MediaWiki. It takes care of dealing with multiple independent data centers. The system is backed by mcrouter, a Memcached proxy written by Facebook. WANObjectCache provides two basic functions: getWithSet and delete. It uses cache-aside in the local data center, and broadcasts invalidation to all data centers. We’ve migrated virtually all Memcached interactions in MediaWiki to WANObjectCache.
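The two operations can be illustrated with a toy model in Python. This is a sketch of the general idea only (cache-aside reads from a local store, deletes broadcast to every data center), not the WANObjectCache implementation or its PHP API. The class `ToyWanCache` and its methods are hypothetical, and the model leaves out what a production system would need, such as expiry, race handling, and network failures.

```python
from typing import Any, Callable, Dict, List

class ToyWanCache:
    """One instance per data center; peers share invalidations, not values."""

    def __init__(self) -> None:
        self._local: Dict[str, Any] = {}
        self._peers: List["ToyWanCache"] = [self]

    @staticmethod
    def link(*caches: "ToyWanCache") -> None:
        """Make each cache aware of all data centers, itself included."""
        for cache in caches:
            cache._peers = list(caches)

    def get_with_set(self, key: str, regenerate: Callable[[], Any]) -> Any:
        # Cache-aside: serve from the local store, else compute and store locally.
        if key not in self._local:
            self._local[key] = regenerate()
        return self._local[key]

    def delete(self, key: str) -> None:
        # Broadcast the invalidation to every data center, including this one.
        for peer in self._peers:
            peer._local.pop(key, None)

primary, secondary = ToyWanCache(), ToyWanCache()
ToyWanCache.link(primary, secondary)

source = {"title": "v1"}
first = secondary.get_with_set("title", lambda: source["title"])  # caches "v1"
source["title"] = "v2"
primary.delete("title")  # issued after the underlying data changes
second = secondary.get_with_set("title", lambda: source["title"])
print(first, second)  # v1 v2
```

Note that the caller never writes a new value into the cache after a change. It only deletes, and the next reader in each data center regenerates the value locally.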

Parser cache

Most of a Wikipedia page is the HTML rendering of the editable content. This HTML is the result of parsing wikitext markup and expanding template macros. MediaWiki stores this in the ParserCache to improve scalability and performance. Originally, Wikipedia used its main Memcached cluster for this. In 2011, we added MySQL as the lower tier key-value store. This improved resiliency from power outages and simplified Memcached maintenance. ParserCache databases use circular replication between data centers.

Ephemeral object stash

The MainStash interface provides MediaWiki extensions with a general key-value store. Unlike Memcached, this is a persistent store (disk-backed, to survive restarts) and replicates its values between data centers. Previously, in our single data center setup, we used Redis as our MainStash backend.

In 2022 we moved this data to MySQL, and replicate it between data centers using circular replication. Our access layer (SqlBagOStuff) adheres to a Last-Write-Wins consistency model.
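For readers unfamiliar with Last-Write-Wins, the following Python sketch shows the general idea: each value carries a modification timestamp, and a replica keeps an incoming write only if it is newer than what it already holds. This is not SqlBagOStuff code. The `Entry` type, the `lww_apply` function, and the tie-break on the value are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class Entry:
    value: str
    modified: float  # timestamp of the write

def lww_apply(store: Dict[str, Entry], key: str, incoming: Entry) -> None:
    """Keep the incoming write only if it is newer than the stored one."""
    current = store.get(key)
    # Assumption: ties on the timestamp are broken by comparing values, so
    # that every replica picks the same winner regardless of arrival order.
    if current is None or (incoming.modified, incoming.value) > (
        current.modified,
        current.value,
    ):
        store[key] = incoming

a = Entry("from-dc1", modified=100.0)
b = Entry("from-dc2", modified=101.5)

dc1: Dict[str, Entry] = {}
dc2: Dict[str, Entry] = {}
for write in (a, b):  # dc1 sees its own write first
    lww_apply(dc1, "k", write)
for write in (b, a):  # dc2 sees the same writes in the opposite order
    lww_apply(dc2, "k", write)

print(dc1["k"].value, dc2["k"].value)  # from-dc2 from-dc2
```

Both replicas converge on the later write even though they received the two writes in different orders.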

Login sessions were similarly migrated away from Redis, to a new session store based on Cassandra. It has native support for multi-region clustering and tunable consistency models.

Reaping the rewards

Most multi-DC work took the form of incremental improvements and infrastructure cleanup, spread over several years. While we did observe latency reductions from some of the individual changes, we mainly looked out for improvements in availability and reliability.

The final switch to “turn on” concurrent traffic to both application data centers was the HTTP verb routing. We deployed it in two stages. The first stage applied the routing logic to 2% of web traffic, to reduce risk. After monitoring and functional testing, we moved to the second stage: route 100% of traffic.

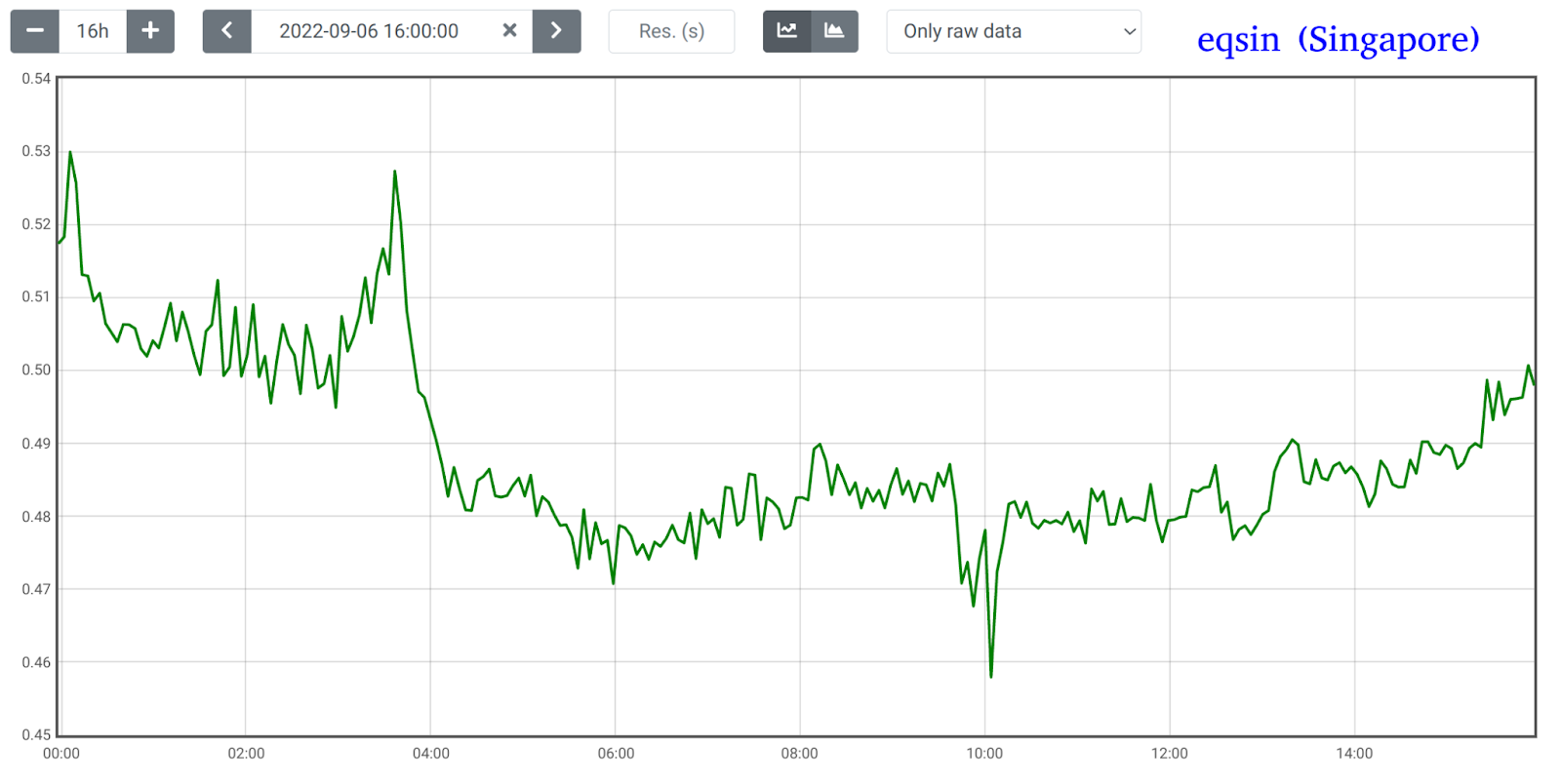

We reduced latency of “read” requests by ~15ms for users west of our data center in Carrollton (Texas, USA), such as logged-in users in East Asia. Previously, we forwarded their CDN cache-misses to our primary data center in Ashburn (Virginia, USA). Now, we can respond from our closer, secondary data center in Carrollton. This improvement is visible in the graph of 75th percentile TTFB (Time to First Byte). The time is in seconds. Note the dip after 03:36 UTC, when we deployed the HTTP verb routing logic.

Further reading

- Wikimedia Foundation selects CyrusOne in Dallas as new data center, Mark Bergsma, 2014.

- Wikimedia’s first failover test, Mark Bergsma, 2016.

- The new data center in Singapore, Brandon Black, 2018.

- Hello, WANObjectCache, Aaron Schulz, 2019.

- One step closer to Multi-DC, Aaron Schulz, 2022.

- Documentation: WANObjectCache architecture

- Documentation: Multi-DC planning and history

About this post

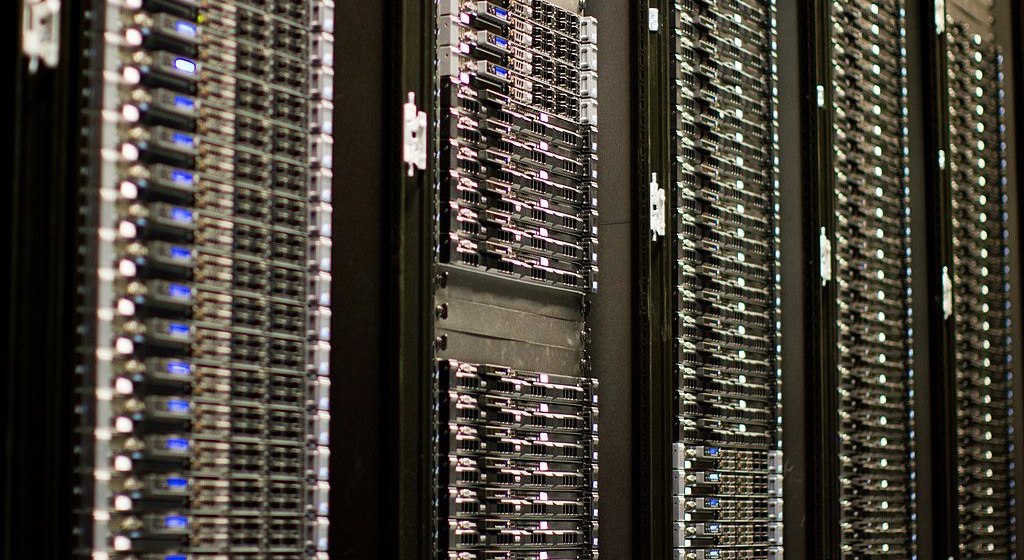

Featured image credit: Wikimedia servers by Victor Grigas, licensed CC BY-SA 3.0.
