What does it mean to “keep community in the loop” when building algorithms for Wikipedia?

By C. Estelle Smith, University of Minnesota

Imagine you’ve just created a profile on Wikipedia and spent 27 minutes working on what you earnestly thought would be a helpful edit to your favorite article. You click that bright blue “Publish changes” button for the very first time, and you see your edit go live! Weeee! But 52 seconds later, you refresh the page and discover that your edit has been wiped off the planet. How would you feel if you knew that an algorithm had contributed to this rapid reversion of all your hard work?

For the sake of illustration, let’s say you were editing a “stub” article about a woman scientist you admire. You can’t remember where you read it, but there’s this great story about how she got interested in computing. So, you spend some time writing up the story to improve her mostly empty bio. Clearly, you’re trying to be helpful. But unfortunately, you didn’t cite your source…and boom!—your work gets blown away. Without any way to understand what happened, you now feel snubbed and unwanted. Will you ever edit again?! 😱

Many edits (like yours) are damaging to Wikipedia, even if they were completed in good faith—e.g. missing citations [ ], bad grammars, mis-speled werds, and incorrect {syntax. And then there are plenty of edits that are malicious—e.g. the addition of offensive, racist, sexist, homophobic, or otherwise unacceptable content. All of these examples make it necessary for human moderators (a.k.a. “patrollers”) to review edits and revert (or fix) the bad ones. However, given the massive volume of edits to Wikipedia each day, it’s impossible for humans to review every edit, or even to identify which edits should be reviewed.

To make it possible(-ish) to build and maintain Wikipedia, the community absolutely requires the help of algorithmic systems. But we need these algorithmic systems to be effective community partners (think R2-D2, cheerfully supporting the Rebel Alliance!) rather than AI overlords (think Terminator…being Terminator). How can we possibly design these systems in a way that supports all of their well-intentioned community stakeholders…including patrollers, newcomers, and everyone in between?

Our team of researchers from the University of Minnesota, Carnegie Mellon University, and the Wikimedia Foundation explored this question in our new open access research paper. We used a method called Value-Sensitive Algorithm Design, which has three steps:

(1) Understand community stakeholders’ values related to algorithms.

(2) Incorporate and balance these values across the full span of the ML development pipeline.

(3) Evaluate algorithms based not only on accuracy, but also on their acceptability and broader impacts.
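To make step (3) a little more concrete, here is a small, hypothetical evaluation harness. It assumes a labeled sample of edits, each with the model's damaging flag plus two human judgments (was it damaging, and was it made in good faith). Alongside plain accuracy, it reports one illustrative impact measure we made up for this sketch: the share of good-faith edits that the model would flag for patrollers. The `Edit` record and `goodfaith_flag_rate` are our own names, not the evaluation method from the paper.

```python
from dataclasses import dataclass

@dataclass
class Edit:
    predicted_damaging: bool  # model's flag after applying a threshold
    damaging: bool            # human judgment: did the edit harm the article?
    goodfaith: bool           # human judgment: did the editor mean well?

def evaluate(edits: list[Edit]) -> dict[str, float]:
    """Report accuracy alongside one illustrative 'broader impact' measure."""
    if not edits:
        raise ValueError("need at least one labeled edit")
    correct = sum(e.predicted_damaging == e.damaging for e in edits)
    goodfaith = [e for e in edits if e.goodfaith]
    # Share of well-intentioned edits that would be flagged for patrollers.
    flagged = sum(e.predicted_damaging for e in goodfaith)
    return {
        "accuracy": correct / len(edits),
        "goodfaith_flag_rate": flagged / len(goodfaith) if goodfaith else 0.0,
    }

sample = [
    Edit(predicted_damaging=True, damaging=True, goodfaith=False),   # vandalism, caught
    Edit(predicted_damaging=True, damaging=True, goodfaith=True),    # uncited newcomer edit
    Edit(predicted_damaging=False, damaging=False, goodfaith=True),  # fine edit, left alone
    Edit(predicted_damaging=True, damaging=False, goodfaith=True),   # fine edit, wrongly flagged
]
print(evaluate(sample))  # accuracy is 0.75; goodfaith_flag_rate is 2/3
```

Notice the second edit: the model is "right" that it is damaging, so accuracy rewards it, yet it still counts toward the good-faith flag rate. That gap between being accurate and being acceptable to newcomers is exactly what step (3) asks us to examine.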

We argue that if you follow these three steps, you can “keep community in the loop” as you build algorithmic systems, making you more likely to avoid catastrophic and community-damaging consequences. Our paper completes the first step of Value-Sensitive Algorithm Design with respect to a prominent machine learning system on Wikipedia called ORES (Objective Revision Evaluation Service).

ORES is a collection of machine learning algorithms that look at the textual changes humans make and produce statistical guesses about each edit, such as how likely it is to be damaging. These guesses are fed via an API, in real time, all across Wikipedia while editors and patrollers do their work in parallel.
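For readers curious what "fed via API" looks like from a tool's side, here is a minimal sketch of reading those guesses. It assumes a JSON payload nested by wiki, revision ID, and model name, with a `probability` map keyed by `"true"` and `"false"`, modeled on the shape of an ORES v3 scores response. The sample payload, revision ID, and `extract_probabilities` helper are invented for illustration, so please check the actual API documentation before relying on this shape.

```python
import json

# Hypothetical payload, modeled on the nesting of an ORES v3 scores response.
SAMPLE_RESPONSE = """
{
  "enwiki": {
    "scores": {
      "123456": {
        "damaging":  {"score": {"prediction": true,
                                "probability": {"false": 0.18, "true": 0.82}}},
        "goodfaith": {"score": {"prediction": true,
                                "probability": {"false": 0.21, "true": 0.79}}}
      }
    }
  }
}
"""

def extract_probabilities(payload: str, wiki: str, rev_id: int) -> dict[str, float]:
    """Return P(true) for each model scored on one revision.

    Assumes every model scored successfully; error handling is omitted.
    """
    scores = json.loads(payload)[wiki]["scores"][str(rev_id)]
    return {
        model: result["score"]["probability"]["true"]
        for model, result in scores.items()
    }

print(extract_probabilities(SAMPLE_RESPONSE, "enwiki", 123456))
# {'damaging': 0.82, 'goodfaith': 0.79}
```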

For example, one prominent place where ORES’ guesses affect user experience is the “Recent Changes” feed, a chronological list that shows every new edit to the encyclopedia. Patrollers often spend time looking through the Recent Changes list, using a highlighting tool built into the interface.

If we fed an edit like yours into ORES, it might output guesses like “82% likely to be damaging” and “79% likely to be done in good faith.” The Recent Changes list could use these scores to highlight your edit in red to show that it is “moderately likely to be problematic.” Or, if the patroller wanted, it could highlight your edit in green to show that you likely meant well.
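Under the hood, highlighting like this amounts to mapping probabilities onto color bands. The sketch below uses cut-offs we invented purely for illustration (they are not the values Recent Changes uses), and the function names are hypothetical. It shows how the very same edit can surface as red under a "damaging" filter and green under a "good faith" filter, depending on which view the patroller chooses.

```python
from typing import Optional

# Hypothetical cut-offs, invented for illustration only.
DAMAGING_BANDS = [  # checked from strictest to loosest
    (0.75, "red", "moderately likely to be problematic"),
    (0.50, "yellow", "may have problems"),
]
GOODFAITH_CUTOFF = 0.70

def damaging_highlight(p_damaging: float) -> Optional[tuple[str, str]]:
    """Return (color, label) for the first band the score reaches, else None."""
    for cutoff, color, label in DAMAGING_BANDS:
        if p_damaging >= cutoff:
            return color, label
    return None

def goodfaith_highlight(p_goodfaith: float) -> Optional[tuple[str, str]]:
    """Return a green highlight when the edit was likely made in good faith."""
    if p_goodfaith >= GOODFAITH_CUTOFF:
        return "green", "likely made in good faith"
    return None

# The example edit from the text: 82% damaging, 79% good faith.
print(damaging_highlight(0.82))   # ('red', 'moderately likely to be problematic')
print(goodfaith_highlight(0.79))  # ('green', 'likely made in good faith')
```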

In either case, both the underlying algorithms of ORES and the highlights they generate strongly affect: (1) how the patroller interacts with your edit, and (2) whether or not you will continue editing in the future. That’s why, in our study, we wanted to understand what values should guide our design decisions with regard to systems like ORES, and how we can balance these values to lead to the best outcomes for the whole community.

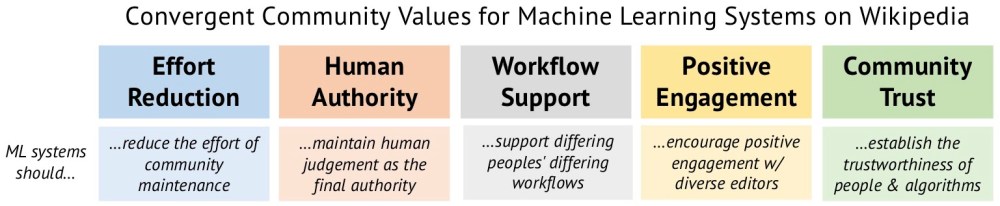

We spoke to dozens of ORES stakeholders, including editors, patrollers, tool developers, Wikimedia Foundation employees, and even researchers, in order to systematically identify which values matter to the community. The infographic above summarizes the results.

For example, one critical value is “Human Authority.” On Wikipedia, the community believes it is vitally important to avoid giving final decision-making authority to the algorithmic system itself. In other words, please, nobody build Terminator! There should never be an algorithm that gets to call the shots and make the final decision about which edits stay, and which edits go. But we do need community partners like R2-D2 to assist with “Effort Reduction” by pointing us in the right direction.
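One way to express "Human Authority" and "Effort Reduction" together in code: the model only reorders the patrol queue, and every final decision is recorded as coming from a named human. This is a hypothetical sketch (the `PendingEdit` record, `review_queue`, and `decide` are our own names, not part of ORES). The design choice worth noticing is that there is no code path that keeps or reverts an edit without a patroller's explicit choice.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PendingEdit:
    rev_id: int
    p_damaging: float               # model score: advisory only
    decision: Optional[str] = None  # set only by a human patroller

def review_queue(edits: list[PendingEdit]) -> list[PendingEdit]:
    """Effort Reduction: show undecided edits, likeliest problems first."""
    undecided = [e for e in edits if e.decision is None]
    return sorted(undecided, key=lambda e: e.p_damaging, reverse=True)

def decide(edit: PendingEdit, patroller: str, action: str) -> None:
    """Human Authority: only a named patroller can keep or revert an edit."""
    if action not in {"keep", "revert"}:
        raise ValueError(f"unknown action: {action}")
    edit.decision = f"{action} by {patroller}"

queue = review_queue([PendingEdit(1, 0.10), PendingEdit(2, 0.82), PendingEdit(3, 0.55)])
print([e.rev_id for e in queue])  # [2, 3, 1]
decide(queue[0], "ExamplePatroller", "keep")
```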

At the same time, the example of your edit shows that along with “Effort Reduction,” we also need to build systems that foster “Positive Engagement.” In other words, ORES should reduce how much work it takes for patrollers to find bad edits, and it also needs to make sure that well-intentioned community members are having positive experiences, even when their edits aren’t up to snuff.

So, maybe when ORES detects damaging (but good faith) edits in Recent Changes, those edits could receive special treatment. For example, rather than wiping out your red-highlighted edit without explanation, perhaps your edit could be allowed to stay online for just a few extra minutes. Recent Changes could take a hint from Snuggle, a tool built to help mentors find and support good-faith newcomers, and direct a patroller to first reach out to you before reverting, providing some scaffolded text like, “Hi @yourhandle! Thanks for making your first edit to Wikipedia! Unfortunately, our algorithm detected an issue… It seems like you meant well, so I wanted to see if you could fix this by adding a citation so that I don’t have to revert it?”
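This "reach out first" idea can be sketched as a simple routing rule: when an edit scores as likely damaging but also likely good faith, suggest a discuss-first path with a pre-filled message and a short grace period; otherwise, leave the usual patrol flow alone. Everything here is hypothetical (the cut-offs, the five-minute grace period, and the names `suggest_workflow` and `Suggestion`). In keeping with Human Authority, the function only returns a suggestion for a patroller; it never reverts anything itself.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional

# Hypothetical values, chosen only for illustration.
DAMAGING_CUTOFF = 0.75
GOODFAITH_CUTOFF = 0.70
GRACE_PERIOD = timedelta(minutes=5)

MESSAGE_TEMPLATE = (
    "Hi @{handle}! Thanks for making your first edit to Wikipedia! "
    "Unfortunately, our algorithm detected an issue: {issue}. "
    "It seems like you meant well, so I wanted to see if you could fix this "
    "so that I don't have to revert it?"
)

@dataclass
class Suggestion:
    workflow: str                      # "discuss-first", "standard-patrol", or "none"
    draft_message: Optional[str] = None
    grace_period: Optional[timedelta] = None

def suggest_workflow(p_damaging: float, p_goodfaith: float,
                     handle: str, issue: str) -> Suggestion:
    """Suggest (never perform) a B-D-R path for damaging but good-faith edits."""
    if p_damaging < DAMAGING_CUTOFF:
        return Suggestion("none")
    if p_goodfaith >= GOODFAITH_CUTOFF:
        message = MESSAGE_TEMPLATE.format(handle=handle, issue=issue)
        return Suggestion("discuss-first", message, GRACE_PERIOD)
    return Suggestion("standard-patrol")

s = suggest_workflow(0.82, 0.79, "yourhandle", "a missing citation")
print(s.workflow)  # discuss-first
print(s.draft_message)
```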

(Yes, this challenges the BOLD, Revert, Discuss (B-R-D) paradigm and suggests that in some cases, Bold, Discuss, Revert (B-D-R) may be a more appropriate way to balance community values. Please discuss!)

In the full paper, we share our journey of applying Value-Sensitive Algorithm Design to understand the Wikipedia community’s values, along with 25 concrete recommendations for developers interested in building ML-driven systems in complex socio-technical contexts. If you work on community-based moderation, we hope our experiences shed light on problems you may be facing in your own community as well.

Thanks for reading! Please share your thoughts in the comments, or get in touch with me @fauxneme on Wikipedia.

About this post

Featured image credit: Le pei (pôle entrepreneuriat et innovation) est à viva tech startup connect 2016, Ecole polytechnique Université Paris-Saclay, CC BY-SA 2.0