MediaWiki History: the best dataset on Wikimedia content and contributors

By Marcel Forns, Joseph Allemandou, Dan Andreescu and Nuria Ruiz, Wikimedia Analytics Team

Wikimedia projects are an integral part of how people build Free and Open knowledge on the Internet. In May 2020, for example, hundreds of thousands of active editors created 4.5 million new articles. They added and changed 60 gigabytes of content in 22 million edits. High-level numbers are fun to explore, but they don’t say a lot about the day-to-day experience of editing. What is it like to collaborate on these projects? What kind of work does an average editor do?

We can use the MediaWiki History dataset to explore this together!

Let’s begin with some questions. Personally, I’ve been a little scared to jump into the fray of editing Wikipedia. Maybe it would help if I knew more about the culture?

Q: How often does a typical editor edit?

Q: How much work goes into each edit?

As we look for answers, we run into some intricacies of editing:

- Automated bots make a lot of edits. People program them to do amazing things, but right now we want to focus on humans.

- Undoing an edit can take only a second, but can change a lot of content. Counting that as “work” might skew our answer.

- Pages surrounding the articles: there is a lot of editing on talk pages, people’s own user pages, and so on. We want to focus just on the articles, but that can be tricky as pages move around.

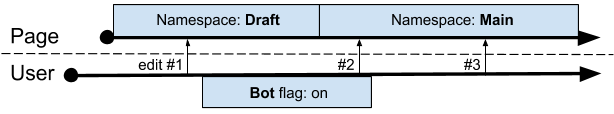

Clearing these hurdles was quite hard before we built the Mediawiki History dataset. The operational database behind Mediawiki does a great job of serving the UI but does not organize data to answer questions like the ones we’re asking here. These are analytics-style questions that depend a lot on the time dimension. To illustrate this, let’s visualize the timeline of a page, alongside the timeline of an editor:

This page started out in the Draft namespace and moved to the Main namespace. The user turned on their “Bot” flag to indicate that an automatic script was making edits on their behalf, then turned it off.

If we look at the database, it tells us what’s true right now, at the end of those timelines. According to this latest state, the user is not a bot and the page is in the Main namespace. If we count “edits by users that are not bots in the Main namespace,” we would count all three of the edits above. What we need is a way to remember the context at each point in history.

Enter the logging table. We use historical information about page moves, user renames, user group changes, and more, to historify each edit: we enrich edits with context about the page being edited and the user doing the editing. We also add fields that would be computationally expensive to get out of the database, like whether an edit was reverted in the future and how many seconds passed until that revert. Fields that end with _historical describe the state at the time of the edit, for example whether the user was a bot when they made the edit. You can find details on each field in the schema documentation. The logic that creates this data from the MediaWiki databases is written in Scala and leverages Spark to distribute the computation.
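To make the idea of "remembering the context at each point in history" concrete, here is a minimal Python sketch. It is not the Scala/Spark logic mentioned above; the change logs, timestamps, and field names (`page_namespace_at_edit`, `user_is_bot_at_edit`) are made up for illustration. It simply looks up, for each edit, the last state change that happened before it.

```python
from bisect import bisect_right

# Hypothetical, simplified change logs: (timestamp, new_value), sorted by time.
page_namespace_log = [(0, "Draft"), (50, "Main")]
user_is_bot_log = [(0, False), (20, True), (40, False)]


def state_at(log, timestamp):
    """Return the value in effect at `timestamp`, given a time-sorted change log."""
    times = [t for t, _ in log]
    index = bisect_right(times, timestamp) - 1
    if index < 0:
        raise ValueError("no state recorded at or before this timestamp")
    return log[index][1]


# Timestamps of three edits made by the same user on the same page (made up).
edits = [10, 30, 60]

# Enrich each edit with the state that was true when the edit happened.
enriched = [
    {
        "timestamp": ts,
        "page_namespace_at_edit": state_at(page_namespace_log, ts),
        "user_is_bot_at_edit": state_at(user_is_bot_log, ts),
    }
    for ts in edits
]

# Count human edits to the Main namespace, judged at the time of each edit.
human_main_edits = [
    e for e in enriched
    if e["page_namespace_at_edit"] == "Main" and not e["user_is_bot_at_edit"]
]
print(len(human_main_edits))
```

With this toy timeline, looking only at the latest state would count all three edits, while the point-in-time lookup keeps just the one made after the page moved to Main and while the bot flag was off.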

So let’s answer the question “How often does a typical editor edit?”

We’ll assume you load up this data in an SQL database with columns as described in the schema. The field event_user_seconds_since_previous_revision might be a mouthful, but it measures exactly what we need: how often, in seconds, a particular user edits.

Let’s take the median (50th percentile) seconds between edits, per user:

select event_user_text,

percentile(event_user_seconds_since_previous_revision, 0.50) as median

from mediawiki_history

where event_entity = 'revision'

and event_type = 'create'

group by event_user_text

Next, we filter out bots. If the editor was in the “bot” group, we add “group” to the event_user_is_bot_by_historical array. If they had a name that contained “Bot” at the time, we add “name.” By the way, we check the name because the group wasn’t used consistently until around 2012. To exclude bots, we just check that the array is empty:

size(event_user_is_bot_by_historical) = 0
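As a rough illustration of how such an array could be built, here is a small Python sketch. The function name `is_bot_by` and the plain substring check are assumptions made for this example; the actual name-matching rule used to build the dataset may be stricter.

```python
def is_bot_by(user_name, user_groups):
    """Return the reasons a user looks like a bot, mirroring the array described above.

    Simplified assumption: a name counts as bot-like if it contains "Bot".
    """
    reasons = []
    if "bot" in user_groups:
        reasons.append("group")
    if "Bot" in user_name:
        reasons.append("name")
    return reasons


# Hypothetical users: an empty list means we treat the editor as human.
print(is_bot_by("ExampleBot", ["bot"]))
print(is_bot_by("CleanupBot", []))
print(is_bot_by("Alice", ["sysop"]))
```

Keeping the reasons in a list, rather than a single true/false flag, lets an analysis decide how strict it wants to be, for example by trusting only the "group" signal.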

A few fields tell us whether this edit is reverted later or is a revert itself. Let’s say we don’t count reverts as editing, and filter them out:

not revision_is_identity_revert
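For intuition, here is one way a revert flag like this could be derived. The sketch assumes that an "identity revert" is an edit that restores a page to exactly the content of an earlier revision, and it compares content hashes to find those cases. That definition, the function name, and the toy revisions are assumptions for illustration, not a description of the dataset's actual pipeline.

```python
import hashlib


def mark_identity_reverts(revision_texts):
    """Flag reverts and reverted edits for one page's revisions, in chronological order.

    Assumption: a revision is a revert if its content is identical to an
    earlier, non-adjacent revision; everything in between counts as reverted.
    """
    count = len(revision_texts)
    is_revert = [False] * count
    is_reverted = [False] * count
    last_seen = {}  # content hash -> index of the latest revision with that content

    for i, text in enumerate(revision_texts):
        digest = hashlib.sha1(text.encode("utf-8")).hexdigest()
        previous = last_seen.get(digest)
        if previous is not None and previous < i - 1:
            is_revert[i] = True
            for j in range(previous + 1, i):
                is_reverted[j] = True
        last_seen[digest] = i

    return is_revert, is_reverted


# Toy history: a good version, a bad edit, then the good version restored.
revert_flags, reverted_flags = mark_identity_reverts(
    ["Cats are mammals.", "Cats are reptiles.", "Cats are mammals."]
)
print(revert_flags)
print(reverted_flags)
```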

And finally, we make sure the page was considered “content,” as opposed to a supporting page, at the time of the edit:

page_namespace_is_content_historical

Once we put all that together, we have the median time between edits for each user. As a rough answer to our question, we could average this out, which gets us:

with median_per_user as (

select event_user_text,

percentile(event_user_seconds_since_previous_revision, 0.50) as median

from wmf.mediawiki_history

where snapshot = '2020-06'

and event_entity = 'revision'

and event_type = 'create'

and size(event_user_is_bot_by_historical) = 0

and not revision_is_identity_revert

and page_namespace_is_content_historical

group by event_user_text

)

select avg(median)

from median_per_user

>>> 2186838.3628780665

2186838.3628780665 seconds is about 25 days.
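If you would rather poke at the same logic outside of SQL, here is a minimal Python sketch of the query above, run on a handful of made-up rows. The tuple layout and the sample values are assumptions for illustration; only the filters and the "average of per-user medians" shape come from the query. It also checks the seconds-to-days conversion.

```python
from collections import defaultdict
from statistics import mean, median

# Made-up rows: (user, seconds since the user's previous revision,
#                bot reasons, is identity revert, page is content)
rows = [
    ("Alice", 3600, [], False, True),
    ("Alice", 7200, [], False, True),
    ("Alice", 86400, [], False, True),
    ("Bob", 600, [], False, True),
    ("Bob", 1200, [], True, True),             # a revert: filtered out
    ("Bob", 1800, [], False, False),           # not a content page: filtered out
    ("CleanupBot", 5, ["name"], False, True),  # looks like a bot: filtered out
]

# Apply the same three filters as the query, then group by user.
seconds_by_user = defaultdict(list)
for user, seconds, bot_reasons, is_revert, is_content in rows:
    if len(bot_reasons) == 0 and not is_revert and is_content:
        seconds_by_user[user].append(seconds)

# Median per user, then the average of those medians.
median_per_user = {user: median(values) for user, values in seconds_by_user.items()}
average_of_medians = mean(list(median_per_user.values()))
print(average_of_medians)

# Converting the real result from seconds to days.
SECONDS_PER_DAY = 24 * 60 * 60
days = 2186838.3628780665 / SECONDS_PER_DAY
print(round(days, 2))
```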

To figure out the amount of content changed, we just select revision_text_bytes_diff, which is the number of bytes added or removed from the article by this edit. We look at the absolute value of this, because it’s negative when removing content, and the average median is 468 bytes, or about half of this paragraph.

While running that, we can also average the count(*), to find the average user has made 18 edits since they signed up. So, while some editors make the news with tens of thousands of articles created and the amazing work that they do, it seems that maybe a lot of the work is small incremental progress done by a lot of people. Digging into the data more reveals that the distributions look like power laws, so maybe average medians are not the best representation.
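To see why averages can mislead on heavily skewed data, here is a tiny Python sketch. The edit counts are invented for illustration and are not taken from the dataset; they just mimic a long-tailed shape where one editor is far more active than everyone else.

```python
from statistics import mean, median

# Made-up edit counts for ten editors: most make a few edits, one makes thousands.
edit_counts = [1, 1, 1, 2, 2, 3, 5, 8, 40, 20000]

average_edits = mean(edit_counts)
typical_edits = median(edit_counts)

print(average_edits)  # pulled far upward by the single very active editor
print(typical_edits)  # closer to what most editors in this toy sample do
```

In a sample like this, looking at percentiles or plotting the distribution on a log scale tends to say more about the typical editor than a single average does.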

An important caveat is that the logging table does not have perfect information. There are missing records, missing page and user ids, and other inconsistencies. For example, we often found pages that didn’t exist but were being deleted in a log entry. We do our best to reconcile these problems, and we will continue to improve the quality by going back and looking at the Wikitext content. But we have vetted that the data is accurate to 99.9% of the metrics we want it to compute for now. That ~0.1% can look big if it happens to be the subject of your analysis, so if you find anything like that, do let us know.

Hopefully, this exploration gives a feel for the MediaWiki History dataset and an idea of the kinds of questions it’s designed to answer. The most important thing is that this dataset is for everyone. If a new field would be useful, let us know on Phabricator! We update and publish this data monthly, but are working on faster incremental updates this quarter. The Analytics mailing list is the place to get news and updates.

This dataset is the heart of Wikistats, our community-focused stats site, and is already being used by researchers to build better ways to examine and improve our movement. How will you use it?

About this post

Feature image credit: Emna Mizouni ‘I edit Wikipedia’ heart t-shirt, VGrigas (WMF), CC BY-SA 3.0