The rollout of single-sign-on (SSO) at the Wikimedia Foundation

By Moritz Mühlenhoff, Staff Site Reliability Engineer, and John Bond, Staff Site Reliability Engineer

This is the second in a three-part series that will describe the introduction of Apereo CAS as an identity provider for single sign-on (SSO) with the services operated by the Wikimedia Foundation. The first post in this series covers the original landscape of Wikimedia’s web-based services, summarizes requirements for a new SSO provider, looks at existing FLOSS solutions, and explains why Apereo CAS was chosen.

This post will cover the following topics:

- The implementation of our identity provider (IDP) setup

- How we achieve high availability

- Software deployments of CAS updates

- The gradual rollout of multi-factor authentication to users

Infrastructure

The Wikimedia Foundation operates two main data centers in the US (Virginia and Texas). Additionally, we operate cache sites in Amsterdam (Netherlands), San Francisco (USA), Singapore, and soon, Marseille (France). Most of our services are operated in both main data centers to provide redundancy against major outages affecting an entire data center. The new IDPs needed the same redundancy. In addition to the main IDPs, we set up two staging hosts to allow for tests without production impact.

In addition to roughly 1,500 bare-metal servers, we also run several clusters of Ganeti, an open-source virtual machine management solution based on KVM and DRBD. Given the relatively small memory/CPU footprint of CAS, we decided to deploy the IDPs on VMs.

Wikimedia’s servers run Debian, which provides us with OpenJDK 11 and Tomcat 9 in the current release (Buster/Debian 10).

All our servers are centrally managed via Puppet, an open-source configuration management system. We wrote a Puppet module and some profiles to manage the configuration of the IDP. The profiles use various Wikimedia-specific Puppet code. However, the core apereo_cas module works with the standard version of Puppet. You can find the module at https://gerrit.wikimedia.org/g/operations/puppet-apereo_cas. We’d be happy to incorporate changes that are generally useful.

High availability

To provide a highly available IDP service, the following components needed to be made redundant:

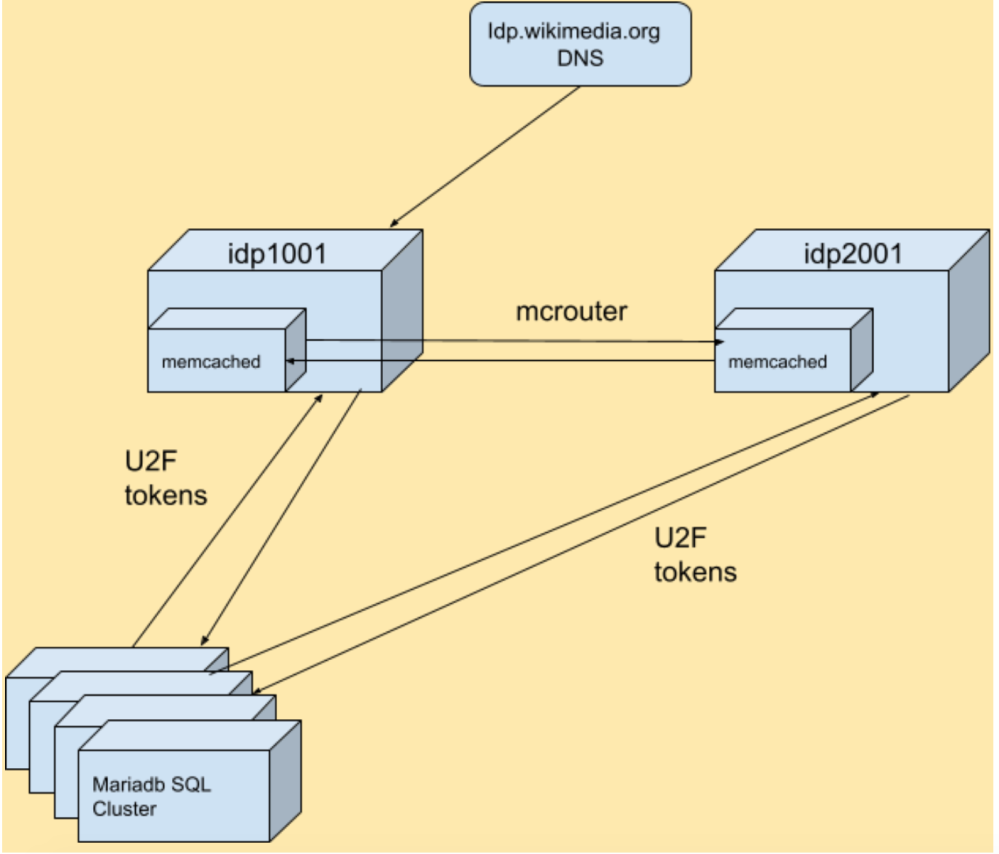

- An IDP server runs in each main data center. This is relatively simple with Puppet since we can manage the entire package and configuration state consistently. End users access the IDPs (which are currently running as idp1001.wikimedia.org and idp2001.wikimedia.org) via a simple idp.wikimedia.org DNS alias (a CNAME record).

- User sessions are stored in Memcached services local to each IDP (using the TLS backend introduced in Memcached 1.6). Updates to a local instance are replicated to the secondary instance via mcrouter, a memcached protocol router originally written by Facebook.

- The U2F token IDs used for multi-factor authentication are stored in Wikimedia’s highly available MariaDB SQL database cluster.
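The session replication described above can be illustrated with a toy model. This is only a sketch of the idea (write locally, copy to the other data center on a best-effort basis), not mcrouter's actual configuration or behavior. The class names `SessionStore` and `ReplicatingRouter` and the key `TGT-1` are hypothetical, and in-memory dictionaries stand in for the Memcached instances.

```python
class SessionStore:
    """Toy stand-in for the Memcached instance local to one IDP."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.up = True  # flip to False to simulate an outage

    def set(self, key, value):
        if not self.up:
            raise ConnectionError(f"{self.name} is unreachable")
        self.data[key] = value

    def get(self, key):
        if not self.up:
            raise ConnectionError(f"{self.name} is unreachable")
        return self.data.get(key)


class ReplicatingRouter:
    """Hypothetical router: writes go to the local store and are
    copied to the remote store; reads are served locally."""

    def __init__(self, local, remote):
        self.local = local
        self.remote = remote

    def set(self, key, value):
        self.local.set(key, value)
        try:
            # Best-effort copy; a remote outage must not fail the login.
            self.remote.set(key, value)
        except ConnectionError:
            pass

    def get(self, key):
        return self.local.get(key)


store_1001 = SessionStore("idp1001")
store_2001 = SessionStore("idp2001")
router_1001 = ReplicatingRouter(local=store_1001, remote=store_2001)
router_2001 = ReplicatingRouter(local=store_2001, remote=store_1001)

# A session created on one IDP is visible on the other.
router_1001.set("TGT-1", {"user": "example"})
print(router_2001.get("TGT-1"))
```

Because every write lands in both stores, a user whose requests move from one IDP to the other still finds their session there.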

Deployment

The most common way to deploy Apereo CAS is via a WAR overlay, which is built using Gradle. Gradle keeps track of all upstream dependencies and, among other things, allows us to easily build a WAR file or a standalone JAR with an embedded Tomcat server. The standalone JAR is useful for local testing, while the WAR file lets us easily deploy the web application to Tomcat.

Our initial deployment forked the upstream cas-overlay-template repository into a local repository on gerrit.wikimedia.org (Wikimedia’s code collaboration platform). We did this for robustness in case the upstream repository is inaccessible, but also to track a few local modifications, such as a custom Wikimedia theme for our IDP login page.

In the early phase of deployment, our Puppet code checked out the overlay repository locally, built the WAR using Gradle, and deployed the latest version. While this was fine for the initial ramp-up, we soon moved to building Debian packages (.deb) for deployments.

For this, we added support to the overlay repository to create a .deb out of the latest release. This allowed us to decouple the build of a new version from its rollout (so that we can, for example, test a new deployment on the staging IDPs first). The resulting cas.deb package ships the WAR file and depends on Tomcat as shipped in Debian; the WAR file gets automatically deployed in the post-installation script of the .deb package. Time permitting, we plan to upstream the Debian packaging work. Interested parties can find the necessary bits in the Debian directory of our overlay repository.
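Decoupling the build from the rollout makes a staged upgrade possible: one already-built package version goes to the staging IDPs first and reaches production only if staging looks healthy. The following is a minimal sketch of that ordering, not our actual tooling. The function `staged_rollout`, the `install` and `healthy` callbacks, the staging host names, and the version string are all hypothetical.

```python
def staged_rollout(version, staging, production, install, healthy):
    """Install `version` on staging hosts first, then production hosts.

    Stops at the first host that fails its health check and returns
    the list of hosts that were upgraded up to that point.
    """
    upgraded = []
    for host in [*staging, *production]:
        install(host, version)
        upgraded.append(host)
        if not healthy(host):
            break  # do not touch any later host
    return upgraded


# Hypothetical staging host names; the production names are the
# ones mentioned in this post.
STAGING = ["idp-staging-a", "idp-staging-b"]
PRODUCTION = ["idp1001.wikimedia.org", "idp2001.wikimedia.org"]

installed = {}
result = staged_rollout(
    version="1.0-example",  # placeholder version string
    staging=STAGING,
    production=PRODUCTION,
    install=lambda host, version: installed.update({host: version}),
    healthy=lambda host: True,
)
print(result)
```

In practice the `install` step would be a package installation and the `healthy` step a login test against the upgraded host.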

Second factor authentication

One of the drivers for implementing SSO at the Wikimedia Foundation was to have a centralized place where we could manage two-factor authentication (2FA) policies and flows. Specifically, we wanted to deploy Universal 2nd Factor (U2F) as a second authentication factor. To allow for a gradual rollout of U2F, we added an LDAP schema extension with custom user attributes, among them mfa-method, and a Groovy script that enables U2F for any user who has it enabled in OpenLDAP via that attribute.
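The per-user decision comes down to a small lookup. The production logic is a Groovy script inside CAS; the Python below is only an illustration of the same idea. The function name `select_mfa_provider` and the attribute value `mfa-u2f` are assumptions made for this example, and only the attribute name mfa-method comes from our setup.

```python
# Assumed value marking a user as enrolled for U2F (illustrative only).
U2F_PROVIDER_ID = "mfa-u2f"


def select_mfa_provider(ldap_attributes):
    """Return the MFA provider to trigger for a user, or None.

    `ldap_attributes` maps attribute names to a string or a list of
    strings, as LDAP attributes can be multi-valued.
    """
    values = ldap_attributes.get("mfa-method", [])
    if isinstance(values, str):
        values = [values]
    if U2F_PROVIDER_ID in values:
        return U2F_PROVIDER_ID
    return None  # no second factor requested for this user


enrolled = {"uid": ["alice"], "mfa-method": ["mfa-u2f"]}
not_enrolled = {"uid": ["bob"]}
print(select_mfa_provider(enrolled), select_mfa_provider(not_enrolled))
```

Users without the attribute keep logging in with a password only, which is what makes the rollout gradual: enabling U2F for one more user is a single LDAP change.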

In the last part of this blog series, we’ll explain how services were integrated into the new SSO realm and what steps were taken to implement single-sign-out.

About this post

Featured image credit: TurkishHandmadePadlocks, Muscol, CC BY-SA 3.0

This is the second in a three-part series of posts. The first post can be found here.