Perf Matters at Wikipedia in 2019

A large-scale study of Wikipedia’s quality of experience

Last year we reported how our extensive literature review on performance perception changed our perspective on what the field of web performance actually knows.

Existing frontend metrics correlated poorly with user-perceived performance. It became clear that the best way to understand perceived performance is still to ask people directly about their experience. We set out to run our own survey to do exactly that, and to look for correlations between a range of well-known and novel performance metrics and the lived experience. We partnered with Dario Rossi, Telecom ParisTech, and Wikimedia Research to carry out the study (T187299).

While machine learning failed to explain everything, the survey unearthed many key findings. It gave us a newfound appreciation for the old-school Page Load Time metric, as the metric that best (or least terribly) correlated with the real human experience.

📖 A large-scale study of Wikipedia’s quality of experience, the published paper.

Refer to Research:Study of performance perception on Meta-Wiki for the dataset, background info, and an extended technical report.

Throughout the study we blogged about various findings:

- Performance: Magic numbers

- Mobile web performance: the importance of the device

- Machine learning: how to undersample the wrong way

- Performance perception: How satisfied are Wikipedia users?

- Performance perception: The effect of late-loading banners

- Performance perception: Correlation to RUM metrics

Join the World Wide Web Consortium (W3C)

The Performance Team has been participating in web standards as individual “invited experts” for a while. We initiated the work for the Wikimedia Foundation to become a W3C member organization, and by March 2019 it was official.

As a member organization, we now collaborate in W3C working groups alongside other major stakeholders of the Web!

Read more at Joining the World Wide Web Consortium

Element Timing API for Images experiment

In the search for a better user experience metric, we tried out the upcoming Element Timing API for images. This is meant to measure when a given image is displayed on-screen. We enrolled wikipedia.org in the ongoing Google Chrome origin trial for the Element Timing API.
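As a rough illustration of what such a measurement can look like, here is a minimal sketch. It assumes the entry shape of the current Element Timing draft (`identifier`, `renderTime`, `loadTime`), which may differ from what was available during the 2019 origin trial. The function names `displayTime` and `observeElementTiming` are hypothetical and not taken from our codebase.

```typescript
// Minimal shape of the fields this sketch reads from an Element Timing entry.
interface ElementTimingEntry {
  identifier: string; // value of the element's `elementtiming` attribute
  renderTime: number; // ms since navigation start; 0 when the browser withholds it
  loadTime: number;   // ms since navigation start when the image finished loading
}

// Prefer the render time; fall back to the load time when the render time
// is withheld (reported as 0), e.g. for some cross-origin images.
function displayTime(entry: ElementTimingEntry): number {
  return entry.renderTime > 0 ? entry.renderTime : entry.loadTime;
}

// Registers an observer when the browser supports "element" entries.
// Returns false (and does nothing) everywhere else.
function observeElementTiming(
  report: (identifier: string, ms: number) => void
): boolean {
  const PO = (globalThis as any).PerformanceObserver;
  const supported: readonly string[] = PO?.supportedEntryTypes ?? [];
  if (supported.indexOf("element") === -1) {
    return false;
  }
  new PO((list: any) => {
    for (const entry of list.getEntries() as ElementTimingEntry[]) {
      report(entry.identifier, displayTime(entry));
    }
  }).observe({ type: "element", buffered: true });
  return true;
}
```

An image opts in through its `elementtiming` attribute, for example `<img src="lead.jpg" elementtiming="lead-image">` (both values are made up for illustration). The feature check keeps the code inert in browsers that do not implement the API.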

Read all about it at Evaluating Element Timing API for Images

Event Timing API origin trial

The upcoming Event Timing API is meant to help developers identify slow event handlers on web pages. This is an area of web performance that hasn’t gotten a lot of attention, but its effects can be very frustrating for users.

Via another Chrome origin trial, this experiment gave us an opportunity to gather data, discover bugs in several MediaWiki extensions, and provide early feedback on the W3C Editor’s Draft to the browser vendors designing this API.
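To give an idea of the kind of data this API exposes, the sketch below splits a slow interaction into the time it waited before its handlers ran and the time the handlers themselves took. It assumes the field names of the current Event Timing draft (`startTime`, `processingStart`, `processingEnd`, `duration`); `breakdown` and `observeSlowEvents` are hypothetical helper names, and the origin-trial version of the API may have differed.

```typescript
// Minimal shape of the fields this sketch reads from an Event Timing entry.
interface EventTimingEntry {
  name: string;            // event type, e.g. "click"
  startTime: number;       // when the user input occurred
  processingStart: number; // when event handlers started running
  processingEnd: number;   // when event handlers finished
  duration: number;        // input to next paint, as reported by the browser
}

interface EventBreakdown {
  name: string;
  inputDelay: number;  // time spent waiting before handlers could run
  handlerTime: number; // time spent inside event handlers
  total: number;       // full duration as reported by the browser
}

function breakdown(entry: EventTimingEntry): EventBreakdown {
  return {
    name: entry.name,
    inputDelay: entry.processingStart - entry.startTime,
    handlerTime: entry.processingEnd - entry.processingStart,
    total: entry.duration,
  };
}

// Reports events slower than thresholdMs, where the browser supports it.
function observeSlowEvents(
  thresholdMs: number,
  report: (b: EventBreakdown) => void
): boolean {
  const PO = (globalThis as any).PerformanceObserver;
  const supported: readonly string[] = PO?.supportedEntryTypes ?? [];
  if (supported.indexOf("event") === -1) {
    return false;
  }
  new PO((list: any) => {
    for (const entry of list.getEntries() as EventTimingEntry[]) {
      report(breakdown(entry));
    }
  }).observe({ type: "event", durationThreshold: thresholdMs, buffered: true });
  return true;
}
```

A large `handlerTime` points at a slow event handler, while a large `inputDelay` suggests the main thread was busy with something else when the user interacted.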

Read more at Tracking down slow event handlers with Event Timing

Implement a new API in upstream WebKit

We decided to commission the implementation of a browser feature that measures performance from an end-user perspective. The Paint Timing API measures when content appears on-screen for a visitor’s device. This was, until now, a largely Chrome-only feature. Being unable to measure such a basic user experience metric for Safari visitors risks long-term bias, negatively affecting over 20% of our audience. It’s essential that we maintain equitable access and keep Wikimedia sites fast for everyone.

We funded and oversaw implementation of the Paint Timing API in WebKit. We contracted Noam Rosenthal, who brings experience in both web standards and upstream WebKit development.
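For readers unfamiliar with the API, reading paint timings from a page can be sketched as follows. The entry names `first-paint` and `first-contentful-paint` come from the Paint Timing specification; the helper names `paintTimes` and `readPaintTimes` are hypothetical. A browser may report only one of the two entries, so both fields are optional.

```typescript
// Minimal shape of the fields this sketch reads from a paint entry.
interface PaintEntry {
  name: string;      // "first-paint" or "first-contentful-paint"
  startTime: number; // ms since navigation start
}

interface PaintTimes {
  firstPaint?: number;
  firstContentfulPaint?: number;
}

// Picks the known paint entries out of a list; unknown names are ignored.
function paintTimes(entries: PaintEntry[]): PaintTimes {
  const result: PaintTimes = {};
  for (const entry of entries) {
    if (entry.name === "first-paint") {
      result.firstPaint = entry.startTime;
    } else if (entry.name === "first-contentful-paint") {
      result.firstContentfulPaint = entry.startTime;
    }
  }
  return result;
}

// Reads paint entries from the current page, or returns an empty result
// where the Performance Timeline is unavailable.
function readPaintTimes(): PaintTimes {
  const perf = (globalThis as any).performance;
  const entries: PaintEntry[] =
    perf && typeof perf.getEntriesByType === "function"
      ? perf.getEntriesByType("paint")
      : [];
  return paintTimes(entries);
}
```

Because a missing entry simply yields `undefined`, the same code can run unchanged in browsers with and without Paint Timing support.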

Read more at How Wikimedia contributed Paint Timing API to WebKit

Update (April 2021): The Paint Timing API has been released in Safari 14.1!

Wikipedia’s JavaScript initialisation on a budget

ResourceLoader is Wikipedia’s delivery system for styles, scripts, and localization. It delivers JavaScript code on web pages in two stages. This design prioritizes the user experience through optimal cache performance of HTML and individual modules, and through a consistent experience between page views (i.e. no flip-flopping between pages based on when they were cached). It also achieves a great developer experience by ensuring we don’t mix incompatible versions of modules on the same page, and by ensuring that rollout (and rollback) of deployments completes worldwide in under 10 minutes.

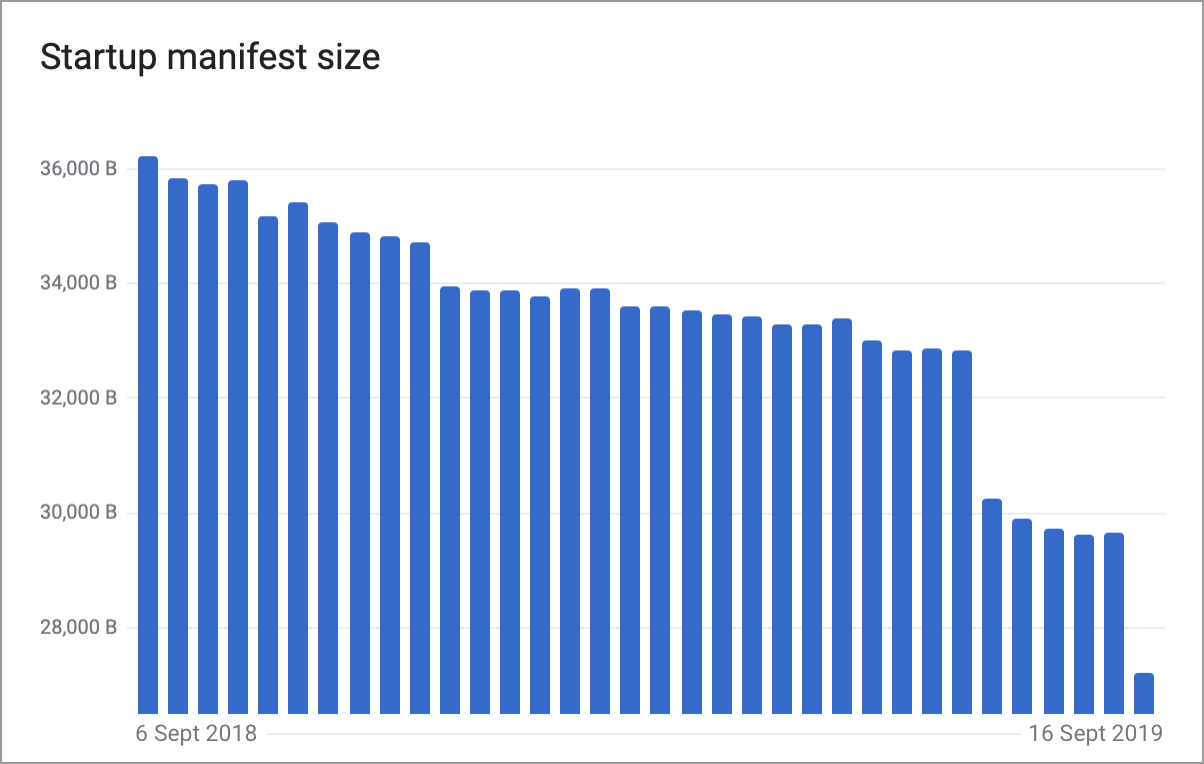

This design rests on the first stage (startup manifest) staying small. We carried out a large-scale audit that shrank the manifest back down, and put monitoring and guidelines in place. This work was tracked under T202154.

- Identify modules that are unused in practice. This included picking up unfinished or forgotten software deprecations, and removing code for obsolete browser compatibility.

- Consolidate modules that did not represent an application entrypoint or logical bundle. Extensions are encouraged to use directories and file splitting for internal organization. Some extensions were registering internal files and directories as public module bundles (like a linker or autoloader), thus growing the startup manifest for all page views.

- Shrink the registry holistically through clever math and improved compression.
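To make the cost of extra registrations concrete, here is a toy sketch of a two-stage loader. The manifest shape, the `ext.example.*` module names, and the functions `manifestSize` and `resolve` are all hypothetical and chosen for illustration; this is not ResourceLoader's actual format or code. The first stage ships the whole manifest on every page view, and the second stage expands the modules a page asks for into a load order.

```typescript
// Hypothetical manifest shape for illustration only.
interface ModuleInfo {
  version: string;
  dependencies: string[];
}
type Manifest = Record<string, ModuleInfo>;

// Stage 1 cost: size of the serialized manifest, paid on every page view.
function manifestSize(manifest: Manifest): number {
  return JSON.stringify(manifest).length;
}

// Stage 2: expand requested modules into a load order, dependencies first.
function resolve(manifest: Manifest, wanted: string[]): string[] {
  const order: string[] = [];
  const seen = new Set<string>();
  const visit = (name: string): void => {
    if (seen.has(name)) {
      return;
    }
    const info = manifest[name];
    if (!info) {
      throw new Error(`Unknown module: ${name}`);
    }
    seen.add(name);
    info.dependencies.forEach(visit);
    order.push(name);
  };
  wanted.forEach(visit);
  return order;
}

// An extension that registers its internal files as public modules...
const split: Manifest = {
  "ext.example.util": { version: "a1", dependencies: [] },
  "ext.example.model": { version: "b2", dependencies: ["ext.example.util"] },
  "ext.example": { version: "c3", dependencies: ["ext.example.model"] },
};

// ...versus the same extension registered as one bundle.
const consolidated: Manifest = {
  "ext.example": { version: "d4", dependencies: [] },
};
```

In this toy model, `manifestSize(consolidated)` is smaller than `manifestSize(split)`, and the difference is paid on every page view regardless of whether the page uses the extension.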

We wrote new frontend development guides as reference material, enabling developers to understand how each stage of the page load process is impacted by different types of changes. We merged and redirected various older guides in favor of these.

Read about it at Wikipedia’s JavaScript initialisation on a budget

Autonomous Systems performance report

We published our first AS report, which explores the experience of Wikimedia visitors by their IP network (such as mobile carriers and Internet service providers, also known as Autonomous Systems).

This new monthly report is notable for how it accounts for differences in device type and device performance, because device ownership and content choice are not equally distributed among people and regions. We believe our method creates a fair assessment that focuses specifically on the connectivity of mobile carriers and Internet service providers to Wikimedia datacenters.

The goal is to watch the evolution of these metrics over time, allowing us to identify improvements and potential pain points.

Read more at Introducing: Autonomous Systems performance report

Miscellaneous

- The team published twice in the Web Performance Calendar this year.

- Introduce automatic creation of performance metrics that measure specific chunks of MediaWiki code in core and extensions. Powered by WANObjectCache, and surfaced via the new WANObjectCache keygroup dashboard in Grafana (T197849).

- Develop and launch WikimediaDebug v2 featuring inline performance profiling, dark mode, and Beta Cluster support.

Further reading

- WikimediaDebug v2 is here (2019)

- Perf Matters at Wikipedia in 2018

- Perf Matters at Wikipedia in 2017

- Perf Matters at Wikipedia in 2016

- Perf Matters at Wikipedia in 2015

About this post

Feature image by Peng LIU, licensed under Creative Commons CC0 1.0.