How we improved performance of a batch process from two days to five minutes

By Martin Urbanec, Software Engineer, Growth Team

The Growth team recently improved the performance of a script that prepares data for use in the mentor dashboard: we decreased the average runtime of the script from more than 48 hours to about five minutes. In this post, you will learn how we did that.

What is the mentor dashboard?

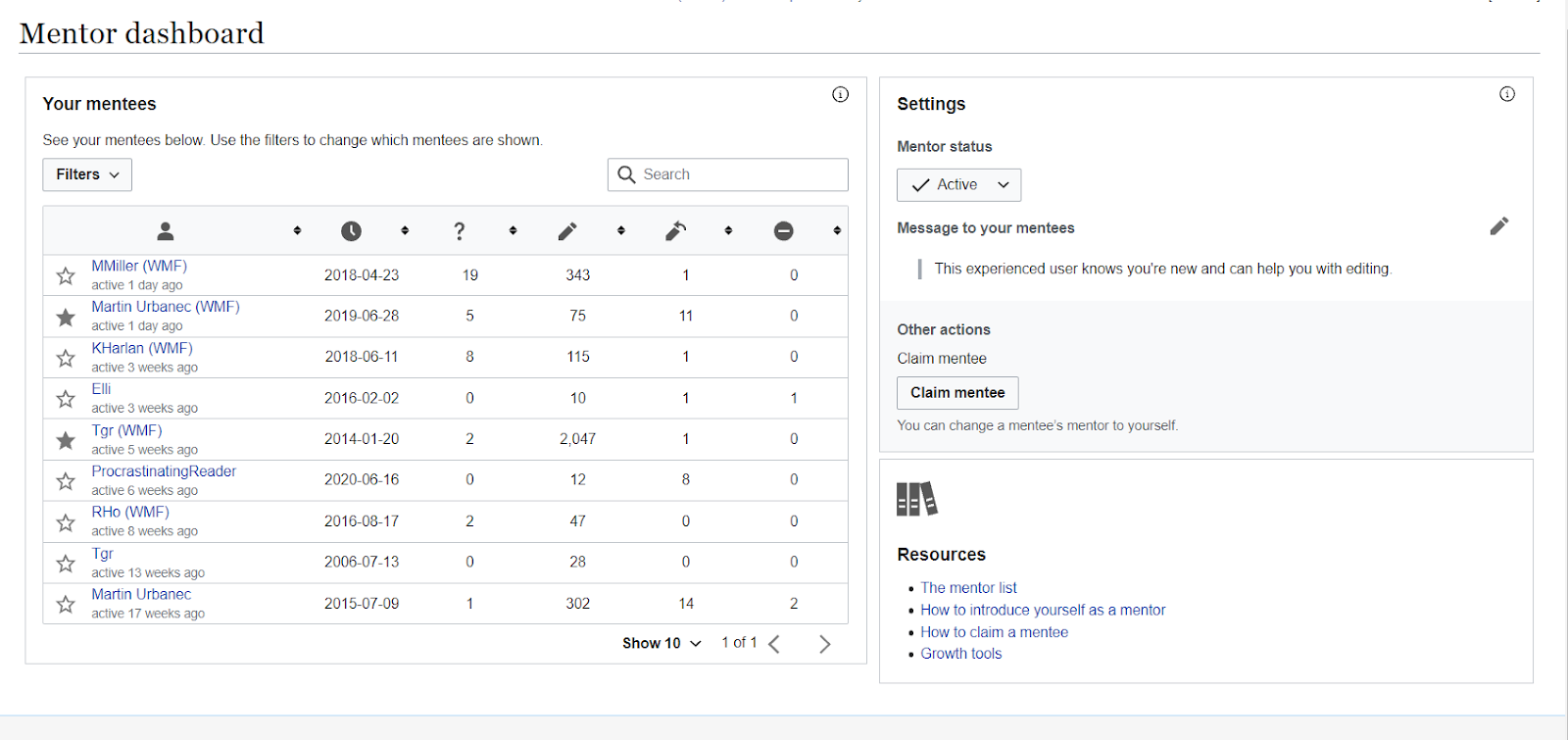

The mentor dashboard project lets community mentors view the list of newcomers that they are mentoring and some information about their editing activity. As of October 2021, the features are only available for Wikipedia projects.

For instance, the mentor dashboard lets mentors see their mentees’ registration dates, the number of questions they asked, the total number of edits they made, how many of those edits were reverted, and how many times they were blocked. This kind of information makes it possible for mentors to be more proactive. Rather than waiting for questions from their mentees, they can reach out early and offer support.

How do we get the data needed for display in the mentor dashboard?

The naive solution would be to calculate the data when a mentor loads their mentor dashboard by issuing the needed SQL queries. However, this wouldn’t scale as the number of mentees grows: a mentor can have anywhere from a few dozen to thousands of mentees. We want the mentor dashboard to load quickly, as we don’t want mentors to have to wait several seconds, or even minutes.

To work around this problem, we decided to precalculate the data and store it in a caching database table. The process responsible for updating data in the database table will be referred to as “the update process” from now on.
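The precalculate-and-cache idea can be sketched as follows. This is only an illustration, not the dashboard's actual code: the `mentee_cache` table, its columns, and both function names are hypothetical, and SQLite stands in for the production database so that the example runs anywhere.

```python
import sqlite3


def update_mentee_cache(conn, mentee_stats):
    """Store precomputed statistics, one row per mentee (hypothetical schema).

    mentee_stats is an iterable of
    (user_id, edit_count, reverted_count, block_count) tuples.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS mentee_cache ("
        " user_id INTEGER PRIMARY KEY,"
        " edit_count INTEGER NOT NULL,"
        " reverted_count INTEGER NOT NULL,"
        " block_count INTEGER NOT NULL)"
    )
    # Replace any existing row so that a rerun refreshes stale data.
    conn.executemany(
        "INSERT OR REPLACE INTO mentee_cache VALUES (?, ?, ?, ?)",
        mentee_stats,
    )
    conn.commit()


def load_dashboard_rows(conn, mentee_ids):
    """Read cached rows for a mentor's mentees; no heavy queries at page load."""
    mentee_ids = list(mentee_ids)
    if not mentee_ids:
        return []
    placeholders = ", ".join("?" for _ in mentee_ids)
    return conn.execute(
        "SELECT user_id, edit_count, reverted_count, block_count"
        f" FROM mentee_cache WHERE user_id IN ({placeholders})"
        " ORDER BY user_id",
        mentee_ids,
    ).fetchall()
```

The expensive work happens in `update_mentee_cache`, which a scheduled job would call; loading the dashboard then only needs a primary-key lookup.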

Since we wanted to deploy the first version of the dashboard to only four wikis (Arabic, Czech, Bengali, and Vietnamese Wikipedias), we first enabled the update process only on those four wikis. Measuring the overall runtime there showed acceptable results: most of the time was spent on the Arabic Wikipedia (two to three hours). I felt that was acceptable and understandable, since Arabic Wikipedia has about 250k mentees to go through.

As I was preparing to deploy the mentor dashboard to more wikis, I started a test run of the update process on the French Wikipedia, to get an idea of how well it performs on that wiki. French Wikipedia has about 200k mentees to go through, which is fewer than the Arabic version has. When I started the test run, I thought “this must take only a couple of hours too, as the number is comparable to the Arabic Wikipedia.” After the test run completed, I was shocked to see it had taken more than two days.

This wasn’t acceptable. We want to run the update process daily, which means it needs to complete within 24 hours. I filed a task in Wikimedia Phabricator and started looking for options to improve the performance of the update process.

Optimizing: Part I

When I was preparing to engineer the mentor dashboard, I experimented with raw SQL queries in the analytics database replicas. I noticed that the “how many times was the mentee blocked” query was remarkably slow. For that reason (and without doing any profiling), I started to suspect it was slowing down the update process.

I felt I knew why the blocks query was slow. Originally, that query used JOIN conditions like user_name=REPLACE(log_title, '_', ' '), meaning that the database wasn’t able to use the index on log_title. However, I wasn’t sure how to do it more efficiently. Another member of the Growth team, Gergő Tisza, suggested that instead of doing the replacements at the database layer, I could do them at the application layer. With those changes, the query would end up using a different index: instead of iterating through all blocks placed on the wiki, it would go through all events related to the mentees. Since, for most wikis, the total number of blocks is greater than the number of events per user, this approach had a chance of decreasing the runtime significantly.
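A minimal sketch of moving the replacement into the application layer is shown below. It is not the actual patch: the function names are made up, the `log_type`, `log_action`, and `log_namespace` filters are assumptions modeled on MediaWiki's logging table, and SQLite with a tiny demo table stands in for the real database. The point is that the query compares log_title with ready-made values, so an index on that column remains usable.

```python
import sqlite3

# Assumption: user names appear in log_title with underscores instead of
# spaces, and block log entries point at pages in the User namespace (2).
USER_NAMESPACE = 2


def to_log_title(user_name):
    """Do the space-to-underscore replacement in the application."""
    return user_name.replace(" ", "_")


def count_blocks(conn, user_names):
    """Return {user_name: number of block log entries} for the given users."""
    titles = [to_log_title(name) for name in user_names]
    if not titles:
        return {}
    placeholders = ", ".join("?" for _ in titles)
    rows = conn.execute(
        "SELECT log_title, COUNT(*) FROM logging"
        " WHERE log_type = 'block' AND log_action = 'block'"
        " AND log_namespace = ?"
        f" AND log_title IN ({placeholders})"
        " GROUP BY log_title",
        [USER_NAMESPACE, *titles],
    ).fetchall()
    counts_by_title = dict(rows)
    return {name: counts_by_title.get(to_log_title(name), 0) for name in user_names}


def make_demo_db():
    """Build a tiny in-memory stand-in for the logging table (demo data only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE logging (log_type TEXT, log_action TEXT,"
        " log_namespace INTEGER, log_title TEXT)"
    )
    conn.execute("CREATE INDEX log_page ON logging (log_namespace, log_title)")
    conn.executemany(
        "INSERT INTO logging VALUES (?, ?, ?, ?)",
        [
            ("block", "block", 2, "Example_User"),
            ("block", "block", 2, "Example_User"),
            ("block", "unblock", 2, "Example_User"),
            ("delete", "delete", 0, "Other_User"),
        ],
    )
    return conn
```

Calling `count_blocks(make_demo_db(), ["Example User", "Other User"])` counts only the two block entries for the first user and reports zero for the second.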

After implementing the idea, the runtime decreased significantly: from 48 hours to 5.5 hours on the French Wikipedia.

Optimizing: Part II

With the performance improvement from the first part in place, I was happy with the French Wikipedia result. It would make it possible to deploy the dashboard. To further prepare for wide deployment of the dashboard, I needed to verify that the update process would also cope well with the English Wikipedia, which gained more than 500k mentees in just three months of Growth features being available there. Unfortunately, the English Wikipedia test run ran for more than three days, even with the Part I performance improvement, and I didn’t have the patience to let it finish.

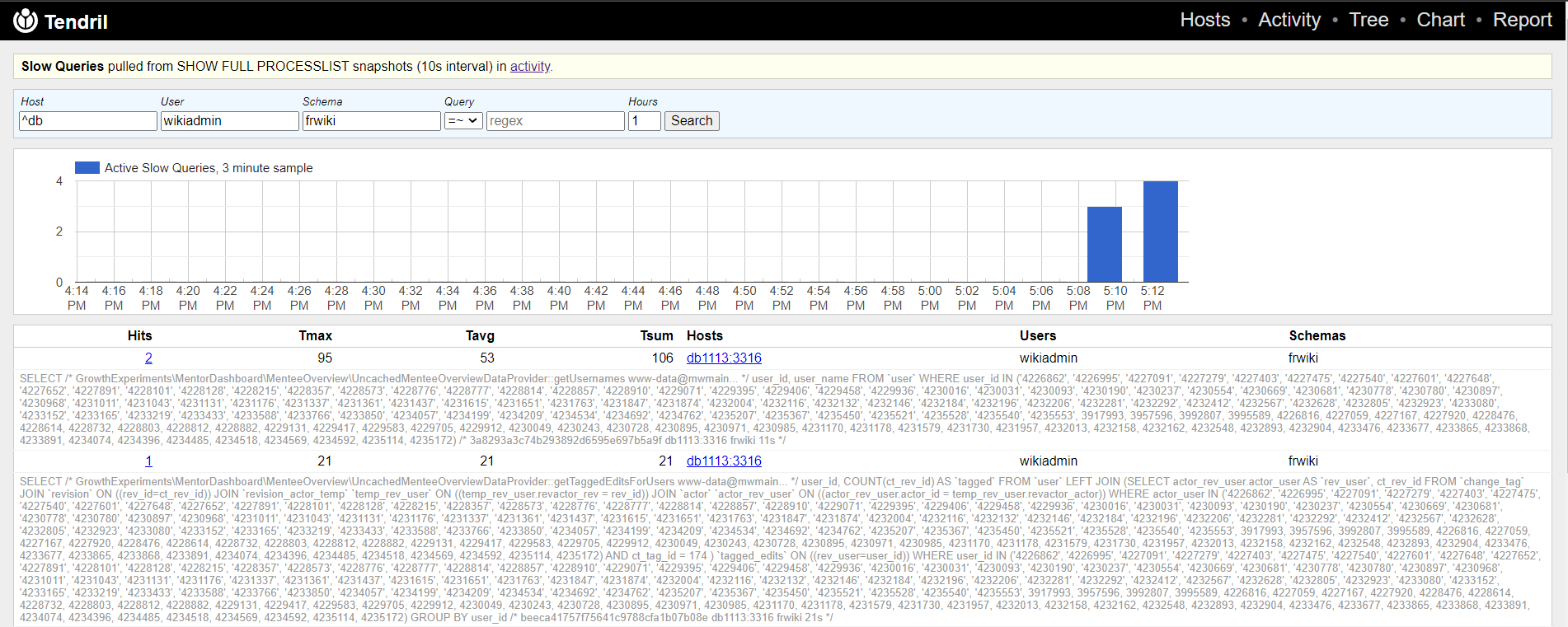

At that point, I was out of ideas about what could be slowing things down. To gather more information about the problem, I decided to consult Tendril. Tendril is a tool for analytics and performance tuning of the MariaDB servers. One of the many features it offers is the Slow queries report, which is available from the “Report” tab. To see queries by the maintenance script on French Wikipedia, I changed the user to wikiadmin (the user maintenance scripts run under) and set the schema to frwiki. Once I did so, I was able to see the queries my script made:

Figure 2: Queries executed by the update process, as shown by Tendril (image source, CC BY-SA 4.0)

I immediately noticed something was off: if you look closely at the user IDs, some of them are integers (as expected), but others are strings. Experimenting with raw SQL queries in the analytics replicas showed that casting the IDs to integers speeds up the query significantly: instead of taking half a minute, the query shown above completed in less than a second. Once I cast the user IDs to integers, the overall update time for French Wikipedia went down from 5.5 hours to 5.5 minutes.
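The cast itself is small. Here is a sketch of normalizing IDs in the application before they reach the query, so that the generated list contains only unquoted integer literals. The helper names are hypothetical. As a possible explanation for the slowdown (an assumption, not something confirmed in this investigation): the MySQL documentation advises against mixing quoted and unquoted values in an `IN()` list because the comparison rules for the two differ.

```python
def normalize_user_ids(raw_ids):
    """Return every user ID as an int, whether it arrived as int or str."""
    normalized = []
    for raw in raw_ids:
        # bool is a subclass of int in Python; reject it along with floats etc.
        if isinstance(raw, bool) or not isinstance(raw, (int, str)):
            raise TypeError(f"unsupported user ID: {raw!r}")
        normalized.append(int(raw))  # raises ValueError for text like "abc"
    return normalized


def render_id_list(raw_ids):
    """Render the body of a SQL IN list using integer literals only."""
    return ", ".join(str(user_id) for user_id in normalize_user_ids(raw_ids))
```

For example, `render_id_list([12, "34", "56"])` yields `12, 34, 56` rather than a mix of `12` and `'34'`. Because every element has passed through `int()`, the rendered list cannot contain arbitrary text.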

Conclusion

With all of the changes described above implemented, the overall update time for French Wikipedia decreased from 48 hours to 5.5 minutes. During the process, I found Tendril to be a very useful tool, as it allows me to view the actual slow queries (including information about their origin).

Given the great performance improvement accomplished, we will look into updating the information in the dashboard more frequently than just daily, as we want to offer mentors data that is as fresh as possible. This will also allow us to let mentors update their own data on demand, in addition to the automated update process, without putting too much load on the database servers.

About this post

Featured image credit: File:Tendril.jpg, Electron, CC BY 2.0