What is in an edit? Automated detection of edit types on Wikipedia

By Jesse Amamgbu and Isaac Johnson

Introduction

Every month, editors on Wikipedia make somewhere between 10 and 14 million edits to Wikipedia content. While that is clearly a large amount of change, knowing what each of those edits did is surprisingly difficult to quantify. This data could support new research into edit dynamics on Wikipedia, more detailed dashboards of impact for campaigns or edit-a-thons, and new tools for patrollers or inexperienced editors. Editors themselves have long relied on diffs of the content to visually inspect and identify what an edit changed.

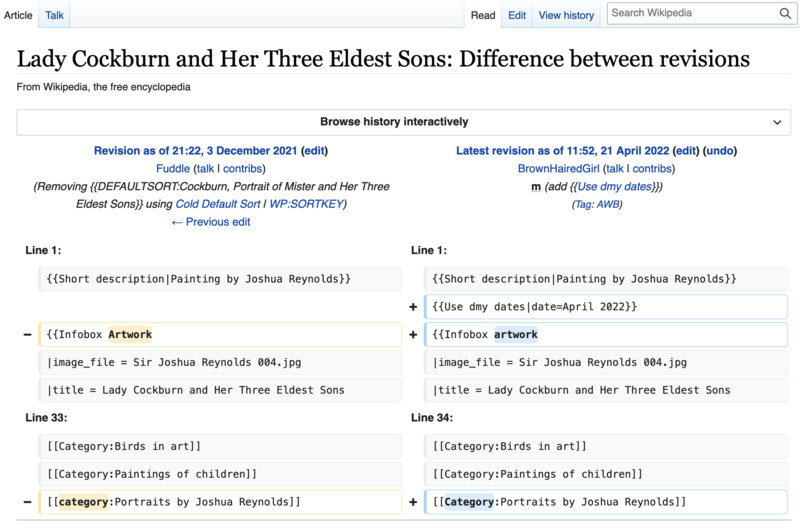

For example, Figure 1 above shows a diff from the English Wikipedia article “Lady Cockburn and Her Three Eldest Sons” in which the editor inserted a new template, changed an existing template, and changed an existing category. Someone with a knowledge of wikitext syntax can easily determine that from viewing the diff, but the diff itself just shows where changes occurred, not what they did. The VisualEditor’s diffs (example) go a step further and add some annotations, such as whether any template parameters were changed, but these structured descriptions are limited to a few types of changes. Other indicators of change – the minor edit flag, the edit summary, the size of change in bytes – are often overly simplistic and at times misleading.

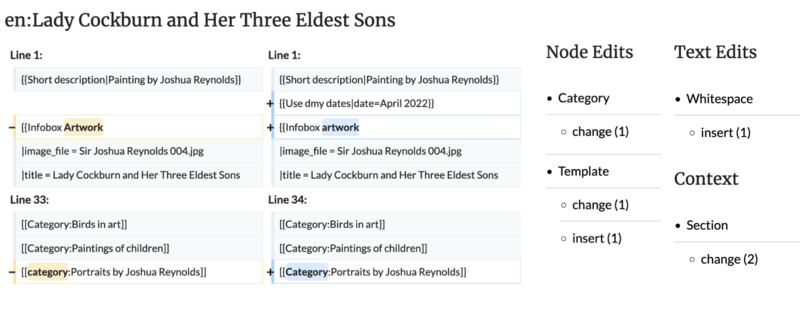

Our goal with this project was to generate diffs that provided a structured summary of the what of an edit – in effect seeking to replicate what many editors naturally do when viewing diffs on Wikipedia or the auto-generated edit summaries on Wikidata (example). For the edit to Lady Cockburn, that might look like: 1 template insert, 1 template change, 1 category change, and 1 new line across two sections (see Figure 2). Our hope is that this new functionality could have wide-ranging benefits:

- Research: support analyses similar to Antin et al. about predictors of retention for editors on Wikipedia or more nuanced understandings of effective collaboration patterns such as Kittur and Kraut.

- Dashboards: the Programs and Events Dashboard already shows measures of references and words added for campaigns and edit-a-thons, but could be expanded to include other types of changes such as images or new sections.

- Vandalism Detection: the existing ORES edit quality models already use various structured features from the diff, such as references changed or words added, but could be enhanced with a broader set of features.

- Newcomer Support: many newcomers are not aware of basic norms such as adding references for new facts or how to add templates. Tooling could potentially guide editors to the relevant policies as they edit or help identify what sorts of wikitext syntax they have not edited yet and introduce them to these concepts (more ideas).

- Tooling for Patrollers: in the same way that editors can filter their watchlists to filter out minor edits, you could also imagine them setting more fine-grained filters, such as not showing edits that only change categories or whitespace.

Background

Automated approaches for describing edits is not a new idea. While our requirements led us to build our own end-to-end system, we were able to build heavily on past efforts. Past approaches largely fit into two categories: human-intelligible and machine-intelligible. The diff in Figure 1 from Wikidiff2 is an example of human-intelligible diffs that are generally only useful if you have someone who understands wikitext interpreting it (a very valid assumption for patrollers on Wikipedia). This sort of diff has existed since the early 2000s (then called just Wikidiff).

Past research has also attempted to generate machine-intelligible diffs, primarily for machine-learning models to do higher-order tasks such as detecting vandalism or inferring editor intentions. These diffs are useful for models in that they are highly structured and quick to generate, but can be so decontextualized as to be non-useful for a person trying to understand what the edit did. An excellent example of this is the revscoring Python library, which provides a variety of tools for extracting basic features from edits such as the number of characters changed between two revisions. Most notably, this library supported work by Yang et al. to classify edits into a taxonomy of intentions – e.g., copy-editing, wikification, fact-update. These higher-order intentions require labeled data; however, that is expensive to gather from many different language communities.

We instead focus on identifying changes at the level of the different components of wikitext that comprise an article – e.g., categories, templates, words, links, formatting, images [1]. The closest analog to our goals and a major source of inspiration was the visual diffs technology, which was built in 2017 in support of VisualEditor. While its primary goal is to be human-intelligible, it does take that additional step of generating structured descriptions of what was changed for objects such as templates.

Implementation

The design of our library is based heavily on the approach taken by the Visual Diffs team [2] with four main differences:

- Visual diffs is written in Javascript and we work in Python to allow for large-scale analyses and complement a suite of other Python libraries intended to provide support for Wikimedia researchers.

- Visual diffs work with the parsed HTML content of the page, not the raw wikitext markup. Because the parsed content of pages is not easily retrievable in bulk or historically, we work with the wikitext and parse the content to convert it into something akin to an HTML DOM.

- We do not need to visually display the changes so we relax some of the constraints of Visual diffs, especially around e.g., which words were most likely changed and how.

- We need broader coverage of the structured descriptions – i.e. not just specifics for templates and links, but also how many words, lists, references, etc. also were edited.

There are four stages between the input of two raw strings of wikitext (usually a revision of page and its parent revision) and the output of what edit actions were taken:

- Parse each version of wikitext and format it as a tree of nodes – e.g., a section with text, templates, etc. nested within it. For the parsing, we depend heavily on the amazingly powerful mwparserfromhell library.

- Prune the trees down to just the sections that were changed – a major challenge with diffs is balancing accuracy with computational complexity. The preprocessing and post-processing steps are quite important to this.

- Compute a tree diff – i.e. identify the most efficient way (inserts, removals, changes, moves) to get from one tree to the other. This is the most complex and costly stage in the process.

- Compute a node diff – i.e. identify what has changed about each individual element. In particular, we do a fair bit of additional processing to summarize what changed about the text of an article (sentences, words, punctuation, whitespace). It is at this stage that we could also compute additional details such as exactly how many parameters of a template were changed etc.

Learnings

Testing was crucial to our development. Wikitext is complicated and has lots of edge cases – images appear in brackets…except for when they are in templates or galleries. Diffs are complicated to compute and have no one right answer – editors often rearrange content while editing, which can raise questions about whether content was moved with small tweaks or larger blocks of text were removed and inserted elsewhere. Interpreting the diff in a structured way forces many choices about what counts as a change to a node – is a change in text formatting just when the type of formatting changes or also when the content within it changes? Does the content in reference tags contribute to word counts? Tests forced us to record our expectations and hold ourselves accountable to them, something the Wikidiff2 team also discovered when they made improvements in 2018. No amount of tests would truly cover the richness of Wikipedia either, so we also built an interface for testing the library on random edits so we could slowly identify edge cases that we hadn’t imagined.

Parsing wikitext is not easy and though we thankfully could rely on the mwparserfromhell library for much of this, we also made a few tweaks. First, mwparserfromhell treats all wikilinks equally regardless of their namespace. This is because identifying the namespace of a link is non-trivial: the namespace prefixes vary by language edition and there are many edge cases. We decided to differentiate between standard article links, media, and category links as the three most salient types of links on Wikipedia articles. We extracted a list of valid prefixes for each language from the Siteinfo API to assist with this, which is a simple solution, but will occasionally need to be updated to the most recent list of languages and aliases. Second, mwparserfromhell has a rudimentary function for removing the syntax from content and just leaving plaintext, but it was far from perfect for our purposes. For instance, because mwparserfromhell does not distinguish between link namespaces, parameters for image size or category names are treated as text. Content from references is included (if not wrapped in a template) even though these notes do not appear in-line and often are just bibliographic. We wrote our own wrapper for deciding what was text, so that the outputs more closely adhered to what we considered to be the textual content of the page.

It is not easy to consistently identify words or sentences across Wikipedia’s over 300 languages. Many languages (like English) are written with words that are separated by spaces. Many languages are not though, either because the spaces actually separate syllables or because there are no spaces in between characters at all. While the former are easy to tokenize into words, the latter set of languages require specialized parsing or a different approach to describing the scale of changes. For now, we have borrowed a list of languages that would require specialized parsing and report character counts for them as opposed to word counts (code). For sentences, we aim for consistency across the languages. The challenge is constructing a global list of punctuation that is used to indicate the ends of sentences, including latin scripts like in this blogpost, as well as characters such as the danda or many CJK punctuation. It is challenges like these that remind us of the richness and diversity of Wikipedia.

What’s next?

We welcome researchers and developers (or folks who are just curious) to try out the library and let us know what you find! You can download the Python library yourself or test out the tool via our UI. Feedback is very welcome on the talk page or as a Github issue. We have showcased a few examples of how to apply the library to the history dumps for Wikipedia or use the diffs as inputs into machine-learning models. We hope to make the diffs more accessible as well so that they can be easily used in tools and dashboards.

While this library is generally stable, our development is likely not finished. Our initial scope was Wikipedia articles with a focus on the current format and norms of wikitext. As the scope for the library expands, additional tweaks may be necessary. The most obvious place is around types of wikilinks. Identifying media and category links is largely sufficient for the current state of Wikipedia articles, but applying this to e.g. talk pages would likely require extending this to at least include User and Wikipedia (policy) namespaces (and other aspects of signatures). Extending to historical revisions would require separating out interlanguage links.

We have attempted to develop a largely feature-complete diff library, but, for some applications, a little accuracy can be sacrificed in return for speed. For those use-cases, we have also built a simplified version that ignores document structure. The simplified library loses the ability to detect content moves or tell the difference between e.g., a category being inserted and a separate one being removed vs. a single category being changed. In exchange, it has an approximately 10x speed-up and far smaller footprint, especially for larger diffs. This can actually lead to more complete results when the full library otherwise times out.

[1] For the complete list, see: https://meta.wikimedia.org/wiki/Research:Wikipedia_Edit_Types#Edit_Types_Taxonomy

[2] For more information, see this great description by Thalia Chan from Wikimania 2017: https://www.mediawiki.org/wiki/VisualEditor/Diffs#Technology_used

About this post

Featured image credit: File:Spot the difference.jpg by Eoneill6, licensed under Creative Commons Attribution 4.0 International

Figure 1 credit: File:Wikitext diff example.png by Isaac (WMF), licensed under the Creative Commons Attribution-Share Alike 4.0 International license.

Figure 2 credit: File:Edit types example.png by Isaac (WMF), licensed under the Creative Commons Attribution-Share Alike 4.0 International license.

It’s fascinating to see the advances in technology that are making it possible to automatically detect edit types on Wikipedia. The ability to classify edits into different types, such as vandalism, copyediting, or adding new content, can provide valuable insights into how the platform is being used and help improve the quality of the content.

Automated edit detection can also be a useful tool for Wikipedia editors, who can use the information to prioritize their work and identify areas where attention is needed. For example, if the analysis shows that a particular page is prone to vandalism, editors can focus their efforts on monitoring and reverting those edits.

However, it’s important to remember that automated edit detection is not foolproof and may not always accurately capture the intent of the edit. Some edits may be more nuanced and require human interpretation to fully understand. Additionally, there is the risk that automated detection could be used to unfairly target certain users or groups based on biases in the algorithm.

Overall, automated detection of edit types on Wikipedia has the potential to be a valuable tool for improving the quality of content on the platform. However, it is important to use this technology carefully and in conjunction with human oversight to ensure that edits are accurately classified and that the platform remains fair and unbiased.