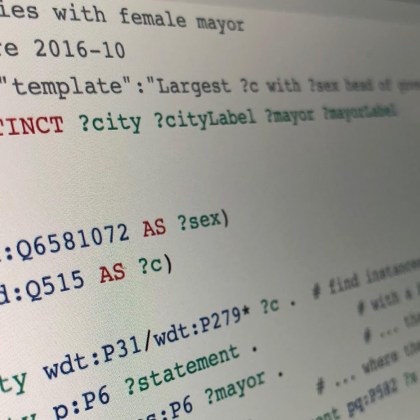

Fixing npm security issues immediately in MediaWiki projects

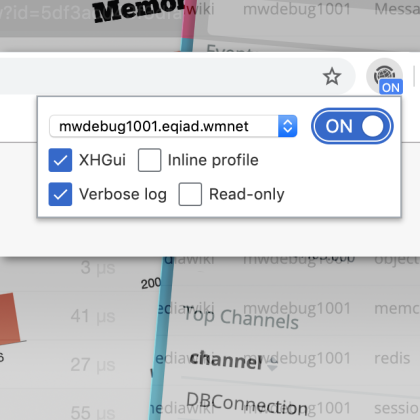

LibUp writes a commit message, mostly by analyzing the diff, fixes up some changes, and pushes the commit to Gerrit, where it passes through CI and is merged. If npm knows the CVE ID for the security update, the commit message mentions it. Each package upgrade is tagged, so if you want to find, say, all commits that bumped MediaWiki Codesniffer to v26, it’s a quick search away.
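To make the idea concrete, here is a minimal sketch of how a commit message could be derived from a dependency diff. It is not LibUp's actual code: the function names, the `build: Updating ...` subject line, and the `Upgrade-Tag:` trailer are all hypothetical, chosen only to illustrate the three ingredients described above (a diff of versions, optional CVE IDs, and a searchable tag per upgrade).

```python
from typing import Dict, List, Optional


def changed_packages(before: Dict[str, str], after: Dict[str, str]) -> List[str]:
    """Return the names of packages whose version differs between the two mappings."""
    return sorted(
        name for name, version in after.items()
        if name in before and before[name] != version
    )


def build_commit_message(
    before: Dict[str, str],
    after: Dict[str, str],
    cves: Optional[Dict[str, List[str]]] = None,
) -> str:
    """Build a commit message from a before/after view of pinned versions.

    `cves` maps a package name to the CVE IDs its upgrade fixes, if known.
    The message layout is an assumption made for this sketch.
    """
    cves = cves or {}
    names = changed_packages(before, after)
    if not names:
        raise ValueError("no package versions changed")

    # Subject: name the package if only one changed, otherwise stay generic.
    if len(names) == 1:
        subject = f"build: Updating {names[0]} to {after[names[0]]}"
    else:
        subject = "build: Updating npm dependencies"

    # Body: one bullet per upgraded package, with any known CVE IDs beneath it.
    body: List[str] = []
    for name in names:
        body.append(f"* {name}: {before[name]} -> {after[name]}")
        for cve in cves.get(name, []):
            body.append(f"  * {cve}")

    # Hypothetical trailer: one searchable tag per upgrade.
    tags = [f"Upgrade-Tag: {name}={after[name]}" for name in names]

    return "\n".join([subject, "", *body, "", *tags])


if __name__ == "__main__":
    # Placeholder package names and CVE ID, for illustration only.
    print(build_commit_message(
        before={"example-pkg": "1.0.0", "other-pkg": "2.0.0"},
        after={"example-pkg": "1.0.1", "other-pkg": "2.0.0"},
        cves={"example-pkg": ["CVE-0000-0000"]},
    ))
```

Putting the tag on its own line in a fixed format is what makes the "quick search" possible: a search for the exact package and version string returns every commit that performed that upgrade.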